Azure Virtual Desktop

With the release of Azure Stack HCI 23H2 in February 2024, Microsoft also made Azure Virtual Desktop (AVD) on Azure Stack HCI generally available. AVD is Microsoft’s Azure-based VDI solution, providing access to Windows desktops and applications from any device with internet access. The shift to hybrid working patterns over the past few years has driven rapid growth in VDI demand, and AVD has been a key player in this space. In addition to being optimized for Microsoft 365 applications, AVD uniquely provides access to Windows 10 and Windows 11 multi-session experiences.

Windows Multi-session

Windows multi-session is a feature in Azure Virtual Desktop that allows multiple users to access the same Windows 10 or 11 session host simultaneously. This makes much more efficient use of the underlying physical infrastructure, with a far higher density of user sessions than is possible with a 1:1 mapping of user to session host. Historically in VDI solutions, the only way to implement multi-session has been with a Windows Server experience. The challenge with a Windows Server experience is that client applications can operate unpredictably when compared to running in a native Windows 10 or 11 client environment. From a cost perspective, Windows multi-session also enables the use of per-user Windows licensing instead of RDS CALs, and spoiler alert, most users are already licensed for Windows 10/11 through Microsoft 365 licensing.
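To put rough numbers on the density argument, here is a minimal Python sketch. The user count and the sessions-per-VM figure are hypothetical values chosen for illustration, not measurements or sizing guidance.

```python
import math


def session_hosts_needed(users: int, sessions_per_vm: int) -> int:
    """Return how many session host VMs are needed for `users` concurrent users."""
    if users < 0 or sessions_per_vm < 1:
        raise ValueError("users must be >= 0 and sessions_per_vm must be >= 1")
    return math.ceil(users / sessions_per_vm)


# Hypothetical example: 1,000 concurrent users.
personal = session_hosts_needed(1000, 1)   # 1:1 mapping of user to session host
pooled = session_hosts_needed(1000, 16)    # assumed 16 sessions per multi-session VM

print(f"1:1 session hosts:           {personal}")
print(f"Multi-session session hosts: {pooled}")
```

The real sessions-per-VM figure depends on the workload profile and VM size, so treat the second argument as something to measure rather than assume.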

AVD on Azure Stack HCI

With the growth of AVD over the last few years, some of the more challenging scenarios encountered in AVD have moved from rare edge cases into a growing set of needs and requirements. A few of those considerations include:

- Data Sovereignty and Compliance: Some organizations face strict regulatory requirements that mandate data and applications remain within specific geographical or jurisdictional boundaries. Azure Stack HCI allows businesses to meet these compliance and data sovereignty requirements by keeping the AVD infrastructure on-premises.

- Performance and Latency: For operations that require low latency, hosting AVD on Azure Stack HCI reduces the distance data travels compared to public Azure. This proximity can significantly enhance performance, especially for time-sensitive applications and services.

- Customization and Control: Azure Stack HCI provides more direct control over the hardware and networking environment. Organizations that need specific configurations or have specific needs that aren’t provided in the rigid world of public cloud might prefer the customizable nature of an on-premises solution.

- Connectivity and Reliability: In areas with unreliable internet connectivity or where bandwidth is limited, relying on a cloud service like Azure might lead to performance issues or complete drop outs in connectivity. Azure Stack HCI enables a more stable and predictable AVD experience under these conditions.

- Cost Management: While Azure offers scalability and flexibility, the operational costs can be unpredictable due to fluctuating usage and bandwidth needs. For organizations with stable and predictable workloads, Azure Stack HCI can be more cost-effective by leveraging existing infrastructure and avoiding cloud-based data transfer fees.

- Integration with Existing Infrastructure: For businesses with significant investments in on-premises infrastructure, Azure Stack HCI allows them to extend and utilize these assets alongside AVD, providing a hybrid approach that bridges on-premises and cloud environments effectively.

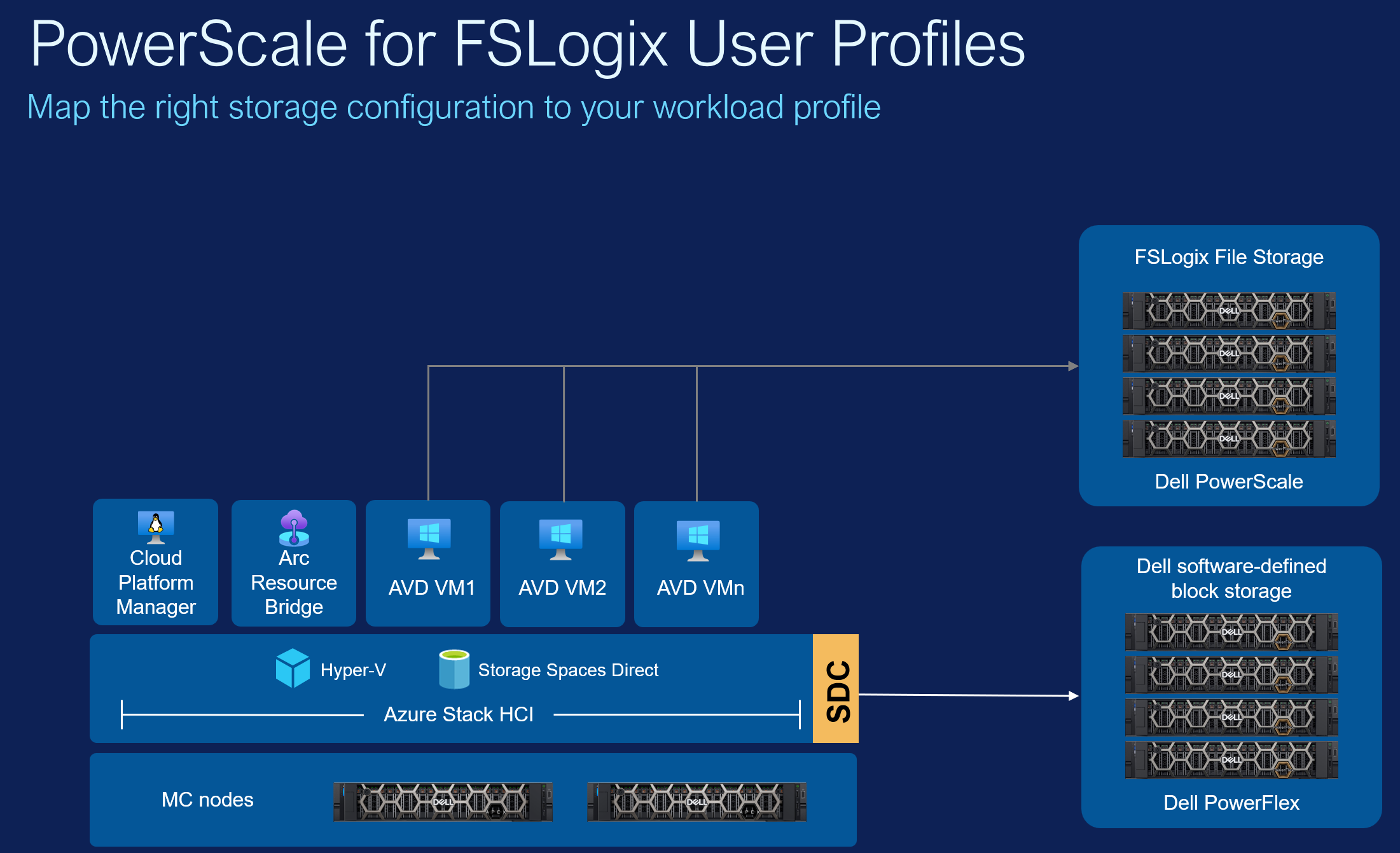

Design Guide and FSLogix Profiles

While AVD on Azure Stack HCI was in preview, the Dell VDI Solutions team put together a fantastic sizing and design guide for AVD on HCI. While the highlights are well worth reading - over 400 user sessions per physical host via LoginVSI testing, say whaaaaaaaaat?! - there was one element I thought could be improved upon.

When implementing user profile management in AVD, FSLogix is the de facto standard, and in fact comes both free of charge with AVD and pre-installed on the Azure Windows 10/11 multi-session marketplace images.

Well, good news - free of charge with AVD includes AVD on Azure Stack HCI, and the same Windows 10 and 11 multi-session images used in Azure are available to Azure Stack HCI users as well. The question now is how to implement FSLogix.

FSLogix has but one core requirement - an SMB share in which to host the VHD images which hold user profiles. Often in Azure this is done in Azure Files. On-premises, there is no Azure Files service. You could still use Azure Files if you wanted, but the latency and resultant lag from mounting a VHD profile over a remote connection is likely to make this a very poor user experience, and kinda goes against a lot of the point of having AVD on premises in the first place. The typical approach we see is to create a Windows File Server or File Server cluster running inside Azure Stack HCI, and share out a file share over SMB. This approach does come with some drawbacks.

- It takes away precious compute resource from your VDI workloads. AVD Session Hosts and FSLogix file servers will compete for resources, and while this may not be visible all the time, during peak usage it becomes more and more likely to rear its head as an issue.

- Single point of failure. The major benefit of FSLogix is that profiles roam between session hosts. By placing FSLogix profiles on the same physical infrastructure as the session hosts, we remove the ability to roam those profiles to session hosts elsewhere in the event of a disaster, and one of the core value props of FSLogix is cut out of the solution. You could work around this with FSLogix Cloud Cache, but again, this goes somewhat against the point of running AVD on-premises.

- Scalability challenges. As the AVD service grows or changes through years of operation, so too will the user profile needs change. By tying the two elements together, we create interdependencies which lead to scalability design challenges for both in the future.

So what’s the solution? The Dell VDI design guide was almost there - a separate Hyper-V cluster was deployed just to run a Windows File Server to host FSLogix profiles. But isn’t that unnecessarily complex? This is literally the job NAS devices were created to cater for - robust, proven, resilient, high performance, and highly storage efficient network attached storage to serve file shares to one or multiple clusters.

Deploying the Solution

To prove out the solution using a tried and tested scale-out NAS device - PowerScale - we first deployed a PowerScale appliance in our lab, and connected it to the same physical switches as our APEX Cloud Platform for Microsoft Azure cluster. I won’t bore you with detailing that deployment, but if you want to try out a virtual version for yourself, the PowerScale simulator is available as an OVA, along with a deployment guide.

As a slight aside here, I should note that there are different levels of integration for various types of storage. Dell PowerFlex required significant engineering investment from Dell and Microsoft to support as external block storage for Azure Stack HCI clusters. PowerScale, by contrast, integrates directly at the VM level, so no additional engineering effort or validation is required to enable this new scenario. It’s a workload VM talking to file storage, so it is already a fully supportable solution.

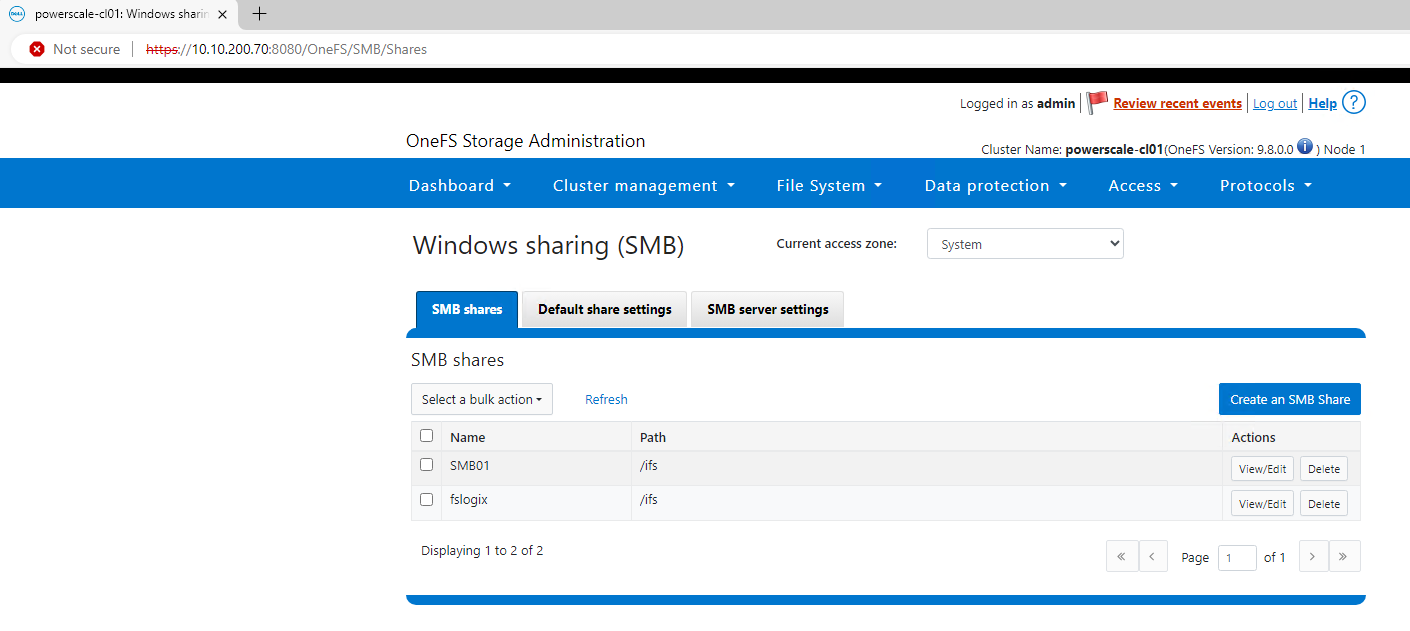

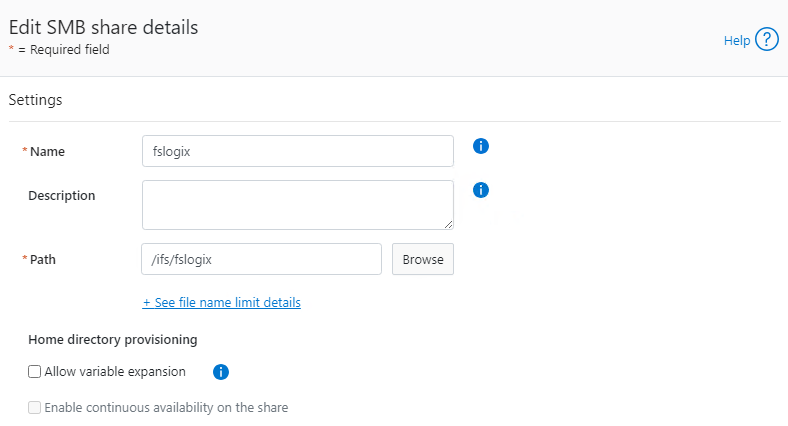

Step one after deploying our PowerScale is to set up an SMB share for FSLogix profiles to live within. We do this simply within the PowerScale web UI with a few clicks.

The SMB share will live within /ifs/fslogix.

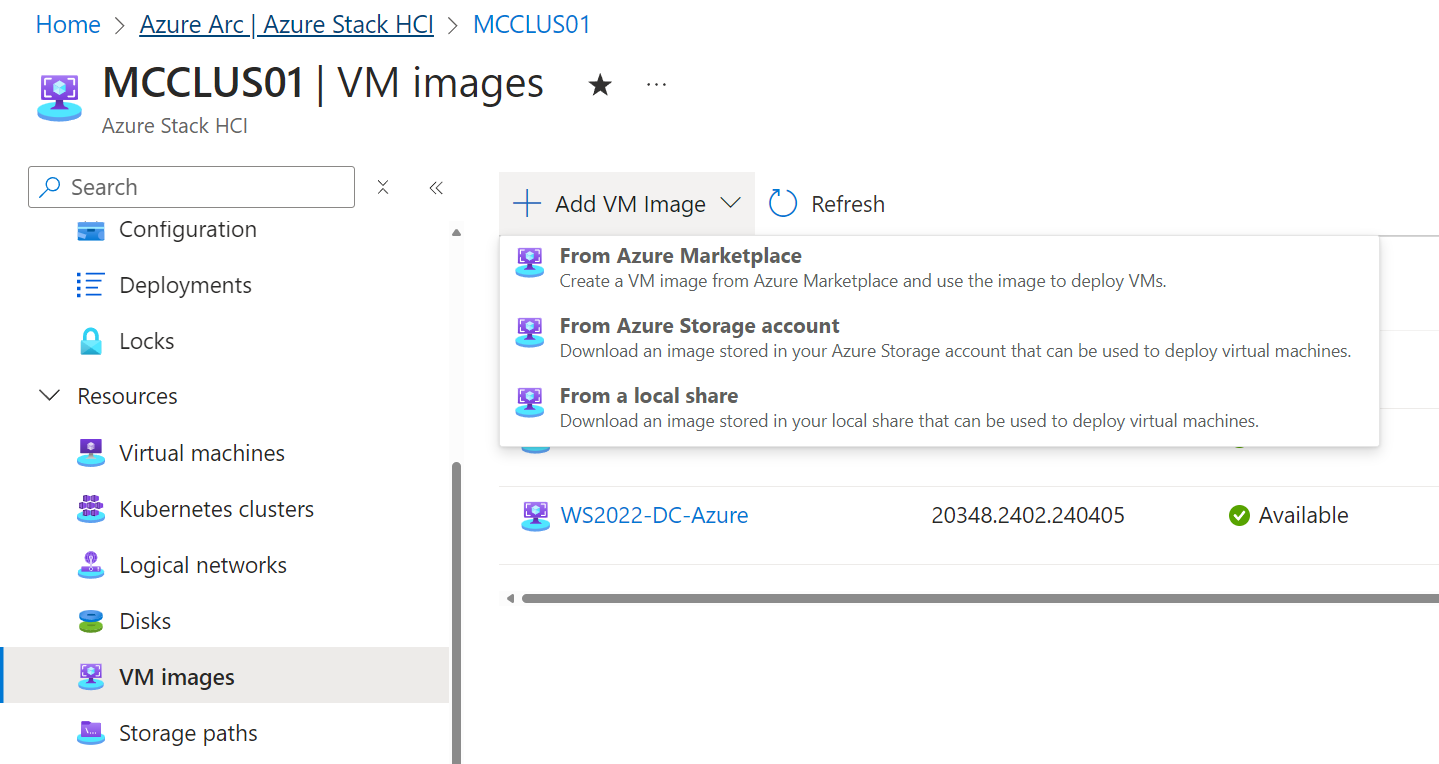

Now we need to configure our AVD settings to a) use FSLogix, and b) point to the correct path. We’re going to make use of the Windows 11 multi-session Azure Marketplace image to do so, as it has the FSLogix bits already installed on it. To get started, navigate to the Azure Stack HCI cluster in the Azure Portal, go to the VM Images path, and Add a VM Image.

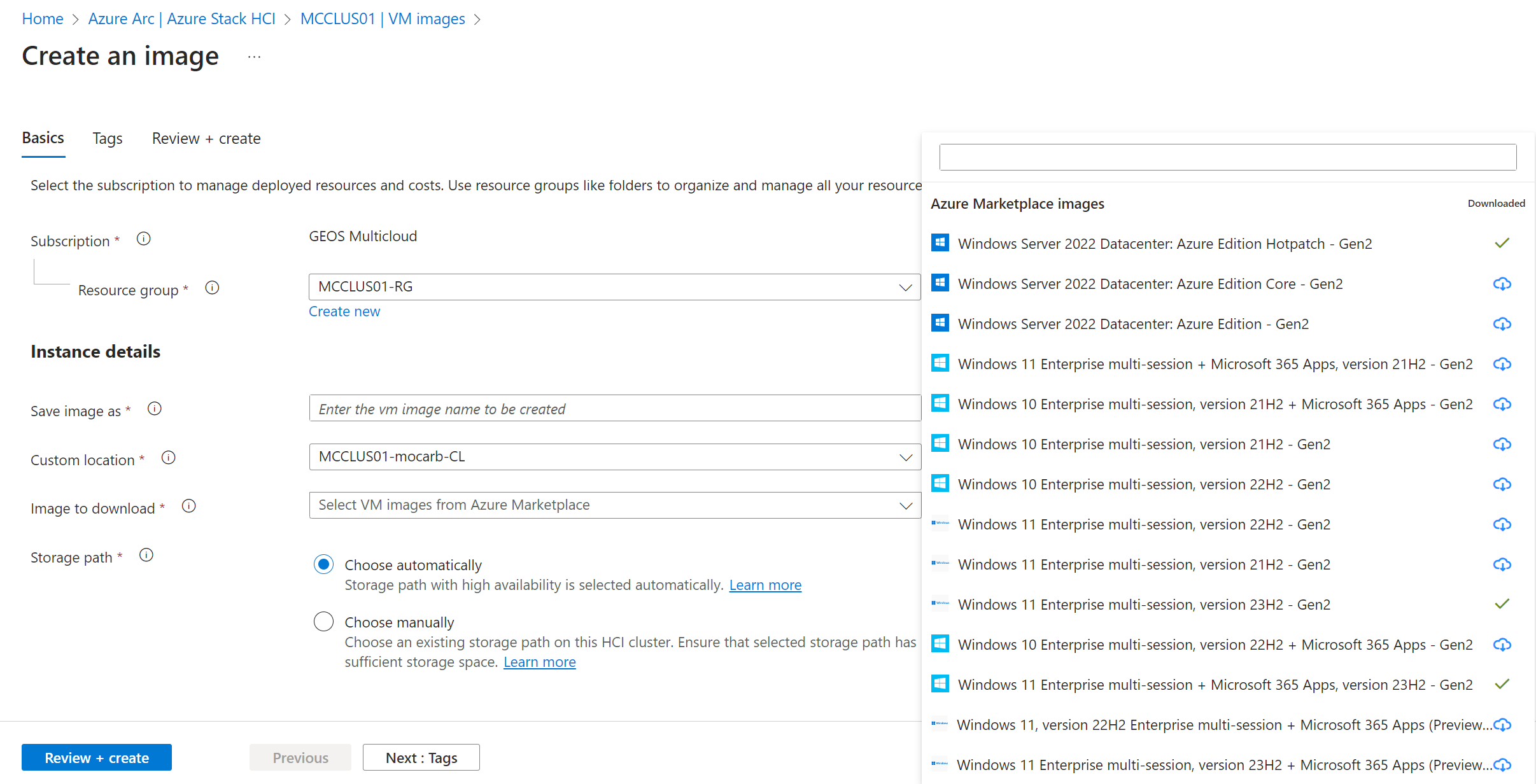

There are a whole bunch of images to choose from; here we’ve gone with the latest and greatest Windows 11 Enterprise multi-session 23H2 with M365 Apps included.

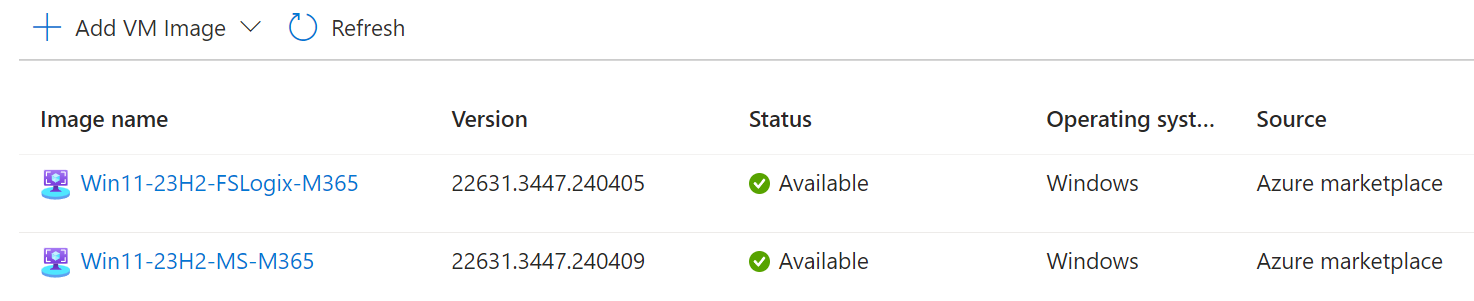

The marketplace image will download into your Azure Stack HCI cluster, and once completed will show as available within the VM Images like so:

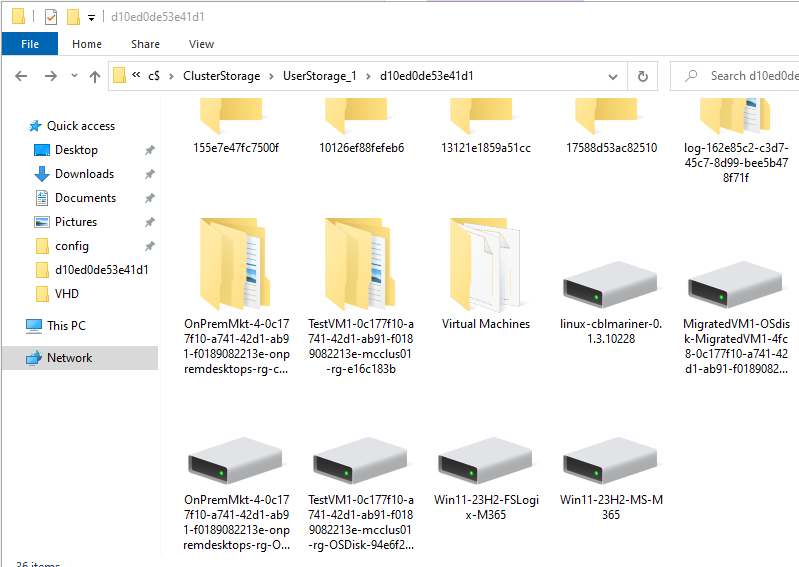

If you then browse to the storage path you chose to store this on in your Azure Stack HCI cluster (in my case C:\ClusterStorage\UserStorage_1), you’ll be able to view the VHD that was created.

This is all well and good, but we want this configured to use PowerScale for FSLogix profiles automatically, and right now none of those settings are contained in the VHD image. In order to sort this, we’ll follow a two-step process. Step one, we need to mount the VHD as a local drive so we can browse its contents. To do this, I first copied it to a temporary location elsewhere on my network, so I wasn’t trying to mount it directly from the Azure Stack HCI CSV.

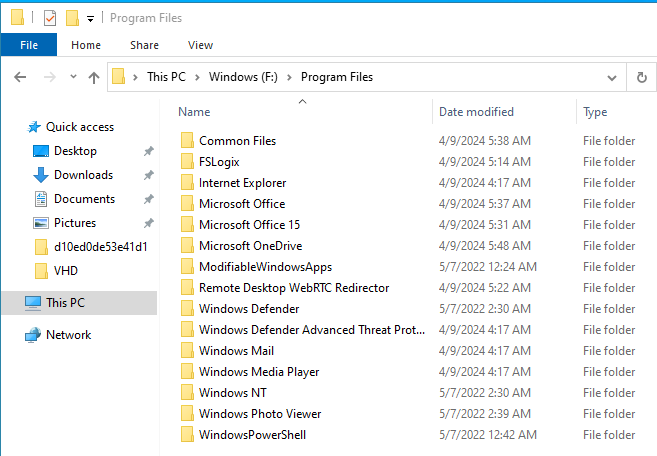

With the VHD mounted as F:, we are now free to explore its contents. We can observe here that FSLogix is indeed installed in this marketplace image out of the box. Nice.

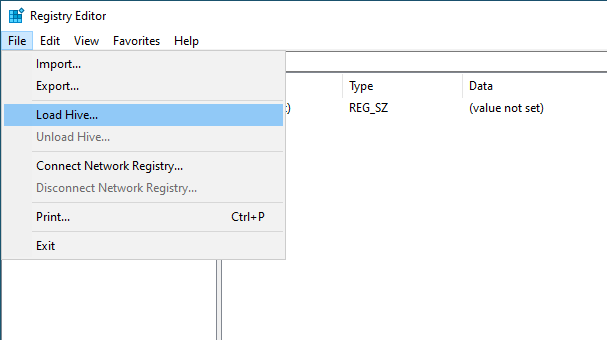

The next step is to update the Registry inside the VHD image to point to our PowerScale file share. To do this, open up the Registry Editor, select HKEY_LOCAL_MACHINE (HKLM), and choose File, Load Hive.

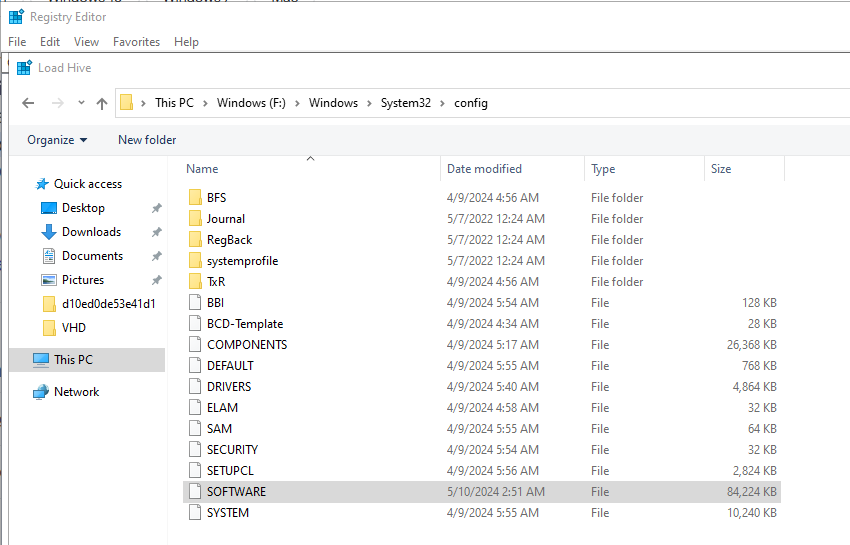

You’ll need to open up the SOFTWARE hive from the VHD mount point. In my case this is F:\Windows\System32\config\SOFTWARE.

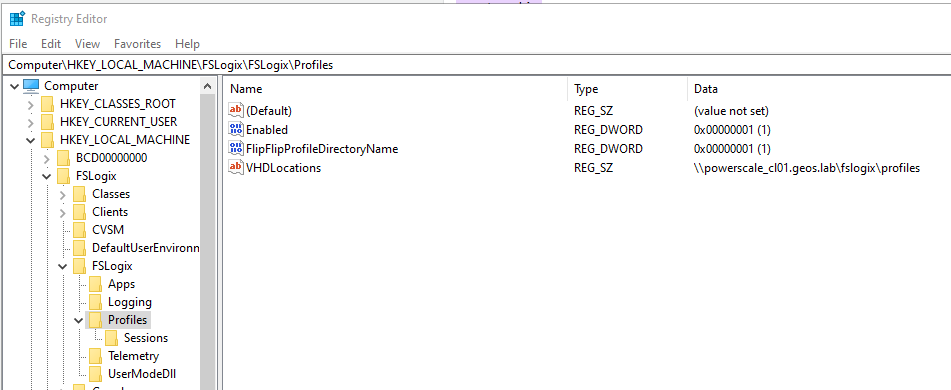

Expand out FSLogix, Profiles, and create the registry values to enable FSLogix. Set a DWORD named Enabled to 1, and a String named VHDLocations to the UNC path of your PowerScale FSLogix profiles share.
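For repeatability, the same two values could be captured in a .reg file and imported while the hive is still loaded. The Python sketch below only generates the file text. The loaded hive name (OfflineSoftware) and the UNC path are assumptions for illustration, so substitute the name you gave the hive in Load Hive and your own share path.

```python
def fslogix_reg_text(hive_mount: str, vhd_location: str) -> str:
    """Build .reg file text that sets Enabled and VHDLocations under FSLogix\\Profiles.

    hive_mount   -- key name chosen when loading the offline SOFTWARE hive (assumed)
    vhd_location -- UNC path of the SMB share that holds the profile containers
    """
    key = "\\".join(["HKEY_LOCAL_MACHINE", hive_mount, "FSLogix", "Profiles"])
    # Backslashes and double quotes must be escaped inside .reg string values.
    escaped = vhd_location.replace("\\", "\\\\").replace('"', '\\"')
    lines = [
        "Windows Registry Editor Version 5.00",
        "",
        f"[{key}]",
        '"Enabled"=dword:00000001',
        f'"VHDLocations"="{escaped}"',
        "",
    ]
    return "\r\n".join(lines)


# Hypothetical hive name and share path, for illustration only.
print(fslogix_reg_text("OfflineSoftware", r"\\powerscale.example.local\fslogix"))
```

Generating the text rather than writing to the registry directly keeps the change reviewable before it is imported into the image.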

Once done, select the top level of the imported hive, and then unload it.

Success! The registry settings are now stored within the VHD Image. Copy the VHD back to the same location within your Azure Stack HCI cluster as you copied it out from. Now when you deploy an AVD session host using this image, it’ll create user profiles using FSLogix on the PowerScale file share, taking advantage of scalable, resilient, high performance external file storage which can be shared across multiple AVD host pools in multiple Azure Stack HCI clusters. Nice.

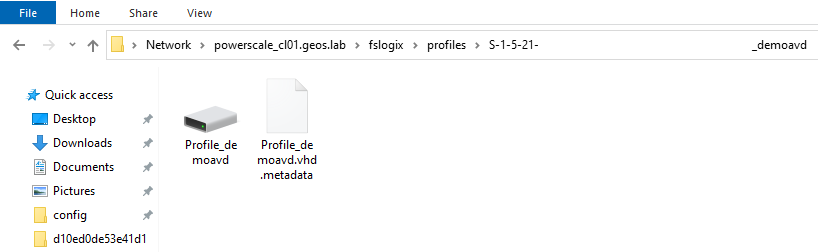

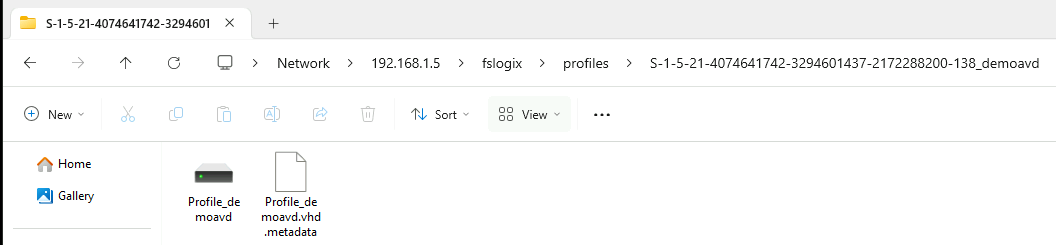

When I log in to an AVD session host, my profile is automatically created on the PowerScale file server - FSLogix stores these as VHD files alongside some metadata, in a folder named after the user SID. This is going to be important for what we do next…
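As a rough illustration of that layout, the sketch below builds the path where a container would be expected, assuming the FSLogix default naming of a `<SID>_<username>` folder holding a `Profile_<username>` disk. The share path, SID, username, and file extension are hypothetical, and naming can be changed through FSLogix settings, so check it against what appears on your own share.

```python
from pathlib import PureWindowsPath


def profile_container_path(share: str, sid: str, username: str,
                           extension: str = "vhdx") -> PureWindowsPath:
    """Return the expected container path under the assumed default naming."""
    folder = f"{sid}_{username}"                 # folder name begins with the user SID
    filename = f"Profile_{username}.{extension}"
    return PureWindowsPath(share) / folder / filename


# Hypothetical share, SID, and user, for illustration only.
path = profile_container_path(r"\\nas\fslogix", "S-1-5-21-1-2-3-1104", "alice")
print(path)
```

The point to take away is that the SID is part of the path, so a user who presents a different SID will not find their existing container.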

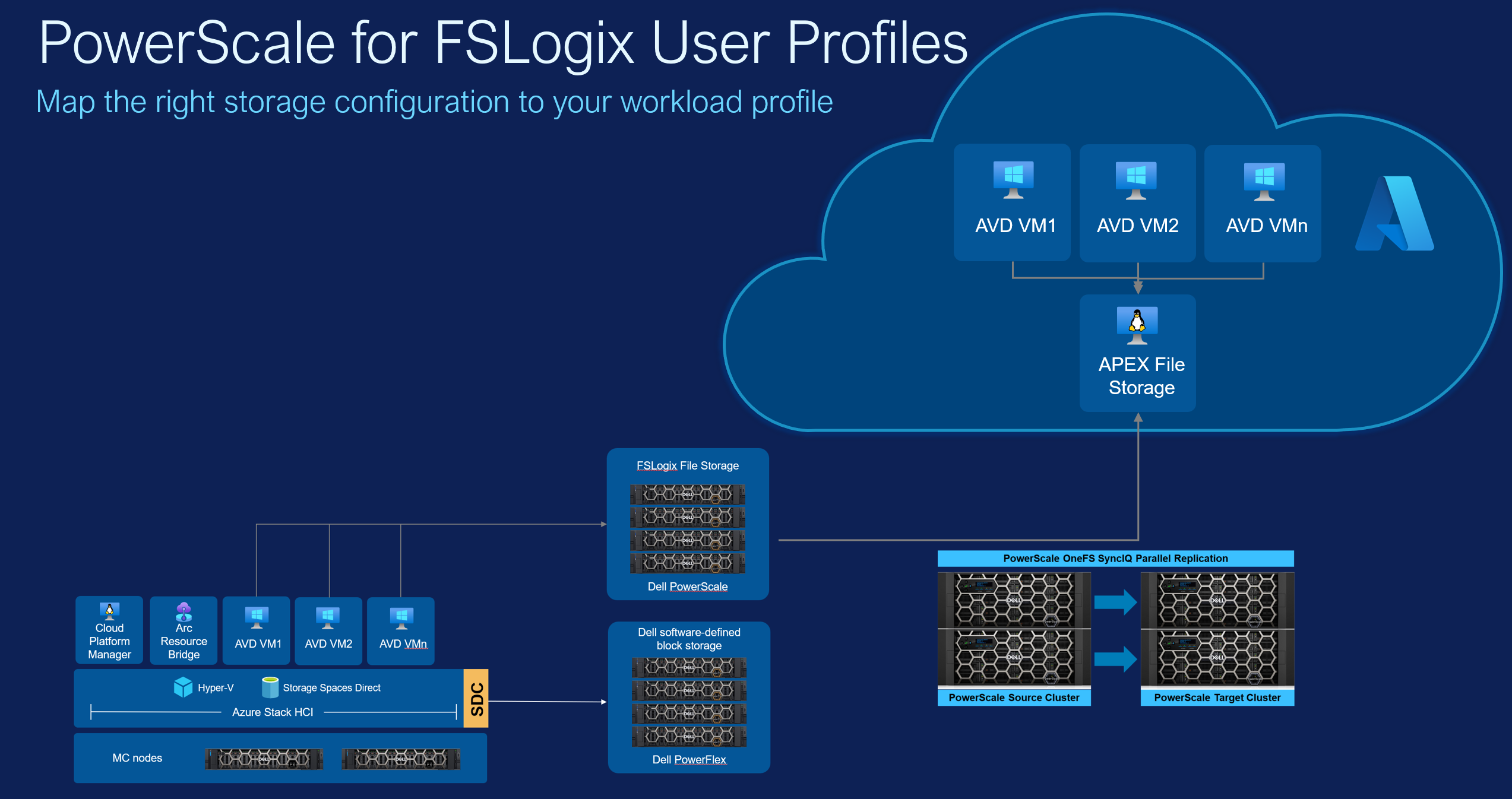

This is only scratching the surface of what we can achieve, though. We’re talking hybrid cloud here, not just on-premises, and AVD users are rarely just operating within Azure Stack HCI; they’re also operating in Azure Public. How then can we build out this story to be more inclusive and all-encompassing across the full AVD portfolio?

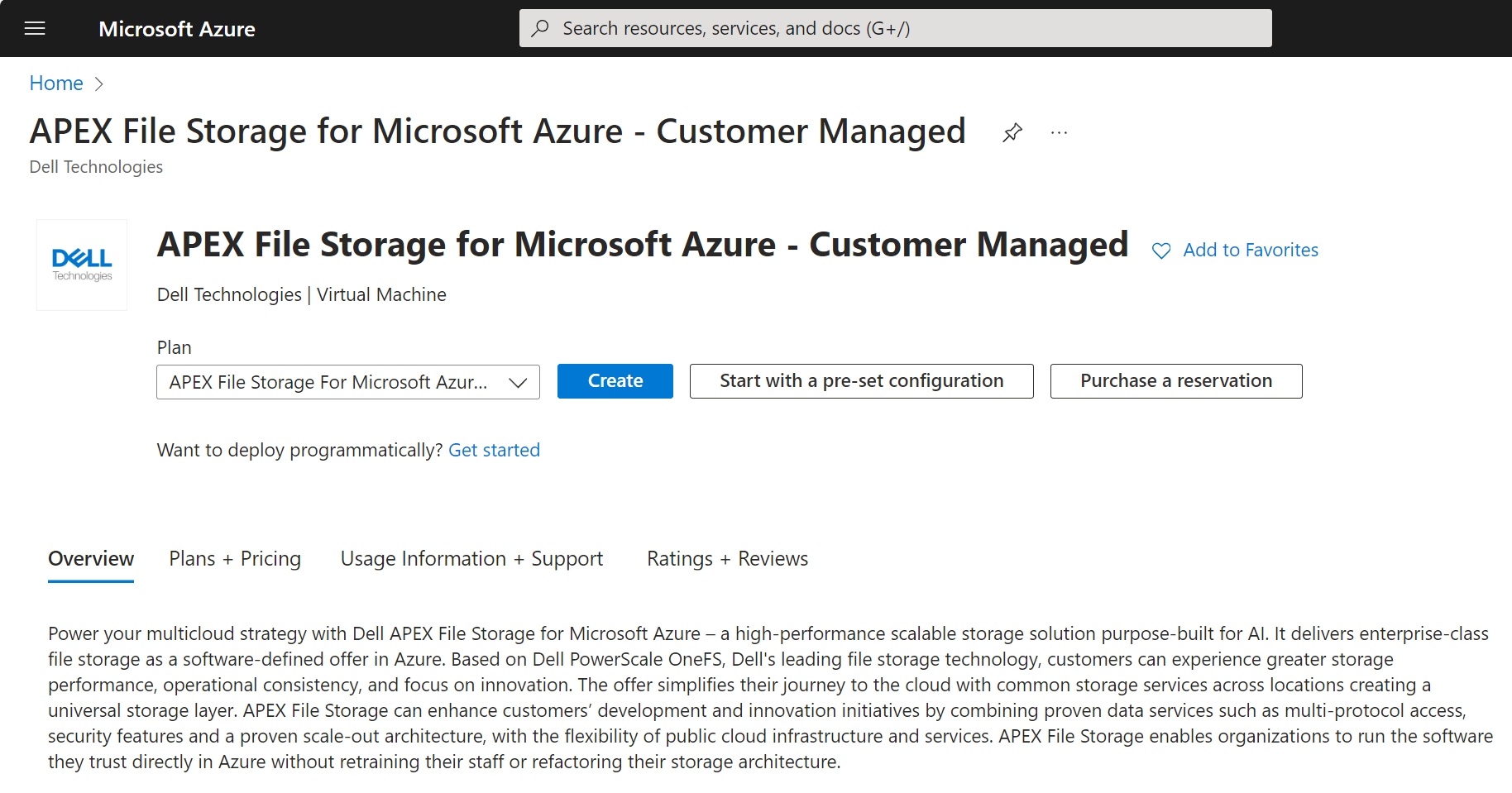

Enter, APEX File Storage for Microsoft Azure.

Dell recently launched APEX File Storage for Microsoft Azure - a new service which uses PowerScale software in Azure virtual machines to provide the same scalable, resilient, and proven OneFS file storage architecture that we’ve enjoyed on-premises for many years, now in the public cloud. I won’t spend this blog delving into the features and benefits, other than to say that if you’ve spent a lot of time with Azure Files, you’re probably already aware of what many of those will be.

Deployment of APEX File Storage for Microsoft Azure is an automated process - today delivered via Terraform, but in the near future via APEX Navigator for Multicloud Storage.

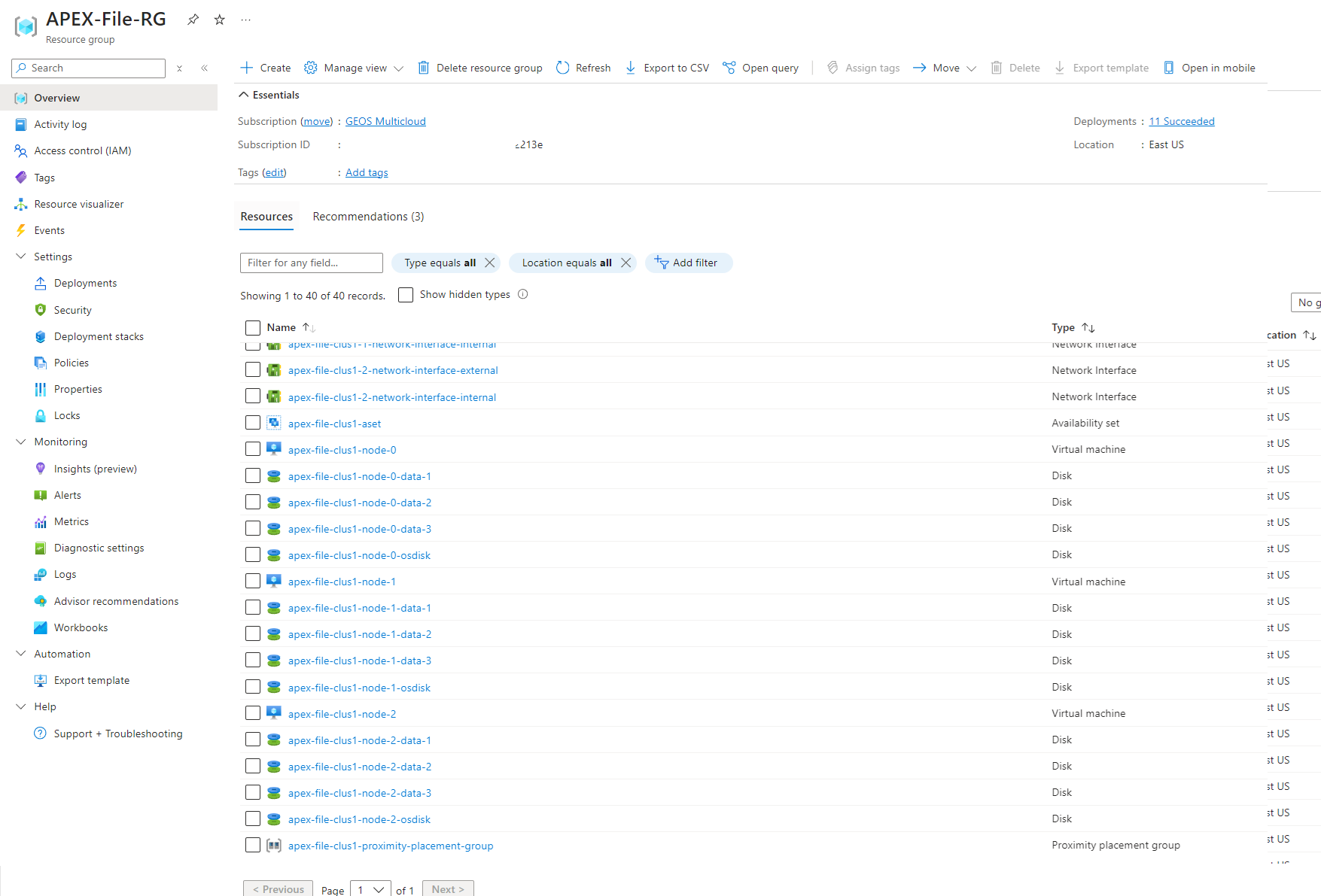

I chose to deploy a three-node APEX File cluster, which creates the appropriate resources in an availability set and collocates them in a Proximity Placement Group to ensure low latency between the cluster nodes.

The process from here is very similar to what came before - I set up a file share in my APEX File cluster the same as in my on-premises PowerScale cluster, and then set up my AVD Session Hosts to use that file share to store FSLogix profile containers. For simplicity, I connected my AVD session hosts to the same vnet, but you could of course use vnet to vnet peering or similar if you prefer to have more granular network segregation for workloads.

Now, because AVD on Azure Stack HCI cannot Entra-join today and has to be Active Directory domain joined, and because FSLogix creates profile containers based on user SID, I had a choice to make in how I set up user synchronisation and my Azure domain. I chose to set up a site-to-site VPN between my on-premises network and the vnet hosting my APEX File and AVD workloads, and extend my Active Directory domain out to Azure. I did experiment with using Microsoft Entra Domain Services as a managed service, and while an Entra sync from AD does propagate users, groups, and credentials to the managed domain, the user SIDs came through differently, even when I tried to set them to propagate through as the same. I don’t think there’s any way to change this, but if you know differently, let me know.
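Because containers are keyed on SID, a simple comparison of username-to-SID mappings from each directory shows whether profiles would line up. The sketch below assumes you have already exported those mappings by whatever means you prefer; the usernames and SIDs are made up for illustration.

```python
from typing import Dict, Optional, Tuple


def sid_mismatches(on_prem: Dict[str, str],
                   other: Dict[str, str]) -> Dict[str, Tuple[str, Optional[str]]]:
    """Return users whose SID in `other` differs from, or is missing versus, on-prem."""
    mismatches = {}
    for user, sid in on_prem.items():
        other_sid = other.get(user)  # None when the user is absent from `other`
        if other_sid != sid:
            mismatches[user] = (sid, other_sid)
    return mismatches


# Hypothetical exports: the second directory issued bob a different SID.
on_prem = {
    "alice": "S-1-5-21-1111-2222-3333-1104",
    "bob": "S-1-5-21-1111-2222-3333-1105",
}
managed = {
    "alice": "S-1-5-21-1111-2222-3333-1104",
    "bob": "S-1-5-21-9999-8888-7777-1105",
}

for user, (expected, found) in sid_mismatches(on_prem, managed).items():
    print(f"{user}: on-prem {expected}, other directory {found}")
```

Any user reported here would get a fresh, empty profile in the second environment rather than their existing one.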

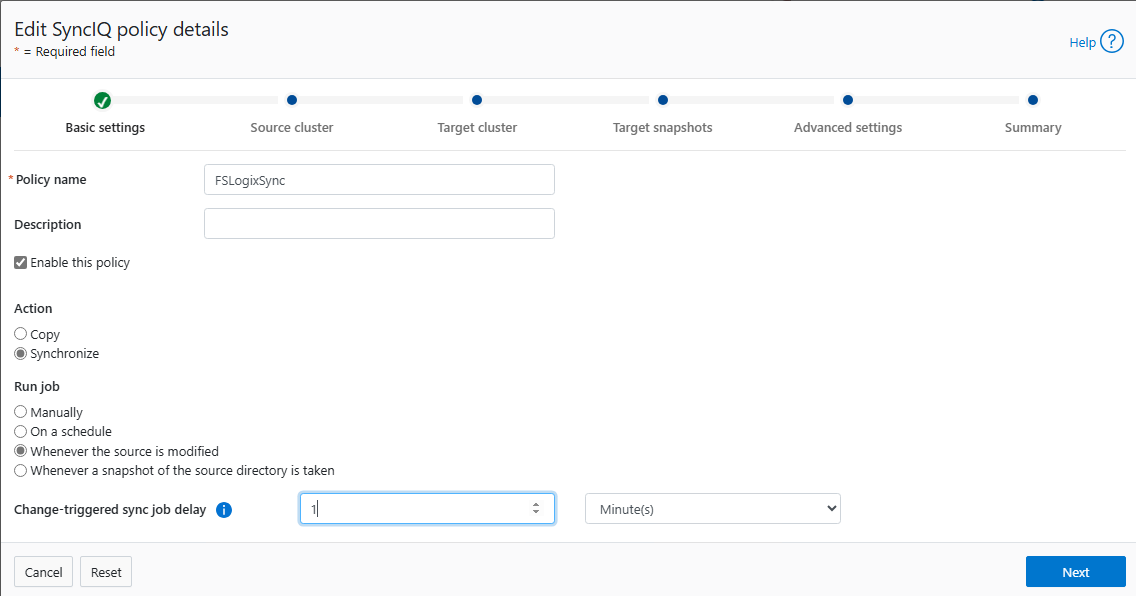

Anyway, with all of this in place I was also able to set up SyncIQ to enable replication of on-premises file data from PowerScale into the APEX File Storage instance in Azure. This is where things get really cool.

I set my SyncIQ policy to run whenever a source file is modified. For FSLogix, that source file is the VHD which holds user profile data, and I believe the trigger equates to when a user session ends and the VHD container is unmounted from their Session Host.

Now that SyncIQ is set up, my user profile data is kept in sync between PowerScale on-premises and APEX File Storage in Microsoft Azure. If I log in to a session host running in either Azure Stack HCI or in Azure, my user profile is available to me immediately and up to date. I am excited to have Entra join enabled for Azure Stack HCI AVD Session Hosts in the future, to remove the dependency on extending AD into Azure to ensure the user SID remains the same.
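One way to spot-check that replication is keeping up is to compare the profile containers visible on each share. This is a minimal sketch that assumes both shares are reachable as paths from the machine running it, and it compares file sizes only, which is a coarse signal. It does not use any SyncIQ API, and a container that is mounted by a live session will legitimately differ until that session ends.

```python
from pathlib import Path
from typing import Dict, List


def container_index(root: Path) -> Dict[str, int]:
    """Map each .vhd/.vhdx file under `root` (by relative path) to its size in bytes."""
    return {
        p.relative_to(root).as_posix(): p.stat().st_size
        for p in root.rglob("*")
        if p.is_file() and p.suffix.lower() in {".vhd", ".vhdx"}
    }


def out_of_sync(source: Dict[str, int], target: Dict[str, int]) -> List[str]:
    """Return source containers that are missing from target or differ in size."""
    return sorted(path for path, size in source.items() if target.get(path) != size)


# Hypothetical indexes, as container_index() would return for each share.
source = {"S-1-5-21-1-2-3-1104_alice/Profile_alice.vhdx": 4096,
          "S-1-5-21-1-2-3-1105_bob/Profile_bob.vhdx": 8192}
target = {"S-1-5-21-1-2-3-1104_alice/Profile_alice.vhdx": 4096}

print(out_of_sync(source, target))
```

In practice you would build both indexes with `container_index()` pointed at each share, then investigate anything the comparison reports.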

This enables a best of all worlds scenario, where user profiles roam to the location the user wants to connect to - maybe a user is out travelling and the latency to an Azure datacenter is lower than to the Azure Stack HCI instance. Maybe we’re in a disaster scenario and have failed over to using Azure due to unavailability of Azure Stack HCI. Maybe a user has some workload requirements that they use Azure for (for example temporary GPU access), and some that they use Azure Stack HCI for (e.g. continuous high performance storage). Regardless of the scenario, we can now keep user profiles in sync between Azure Stack HCI and Azure, using a file storage technology that huge numbers of customers have already invested into on-premises, extended now to Azure. Nice.