In our previous blog post we established that, from the perspective of Azure and Azure Arc, there are several distinct definitions for server types that may or may not be Arc-enabled in some way, and that Azure Stack HCI VMs are their own unique subset of Arc-enabled servers. That brings us neatly to the really important question - how do I migrate my workloads into Azure Stack HCI 23H2 and have them correctly enabled as Azure Stack HCI VMs?

In years gone by, there were established patterns for moving from almost any platform into Hyper-V environments, whether using SCVMM, StarWind, Carbonite, Veeam, Commvault, or a host of other approaches. The challenge now is that while each of those established tools can still migrate workloads into Azure Stack HCI, the workloads end up as plain Hyper-V virtual machines, without the management, cost, policy, and feature benefits that you've bought into with Azure Stack HCI. You can of course deploy the Azure Connected Machine agent on those migrated VMs and connect them to Azure, but they're still not Azure Stack HCI VMs; they're merely Arc-enabled servers, again lacking the bulk of the benefit ostensibly conferred by the underlying platform.

To address this challenge, Microsoft have been hard at work enhancing the Azure Migrate service, adding two new features that migrate workloads into Azure Stack HCI as Azure Stack HCI VMs: Azure Migrate based migration of Hyper-V workloads to Azure Stack HCI, and Azure Migrate based migration of VMware workloads to Azure Stack HCI. The former is currently in public preview, while the latter is in private preview.

You might be tempted to think that the ability to migrate from VMware to Azure Stack HCI is the more critical capability to bring to market, but in my opinion being able to migrate from Hyper-V is actually far more important, and hopefully by the end of this article you’ll be of a similar opinion.

As a starting point, let's assume that your current workloads are heterogeneous - they're running in multiple different ecosystems or environments, which becomes more and more likely the larger an organisation is. Maybe you have some running in VMware, a Nutanix AHV cluster running others, and some bare metal SQL servers. How then do you migrate all of these workloads to Azure Stack HCI? Once Azure Migrate for VMware to Azure Stack HCI is generally available you'll be able to use it to move the VMware based workloads, but what about the AHV workloads, or the bare metal instances? There's a school of thought that says your best option is to rebuild them, and in general I'd lean in that direction, but the reality is that it's not always possible to follow the technically best path.

The good news is that all of the options I mentioned previously - Veeam, Carbonite, Commvault and so on - will still work as they always have to move the workloads into your Azure Stack HCI cluster as basic Hyper-V virtual machines. Hurrah! Our task, then, is to convert each Hyper-V VM into an Azure Stack HCI VM, and that's the purpose of this blog.

Before going any further, I need to note that migrating from one platform to another requires a lot of planning and preparation, and what follows is not intended to diminish or misrepresent the pre-planning involved: designing networking on the new platform, configuring storage, ensuring security standards are met, rewriting internal procedural documentation, and a whole host of other activities. This blog focuses on the latter part of a migration exercise, once you've moved the data between platforms but can't yet take advantage of all of the Azure Stack HCI Arc VM goodies.

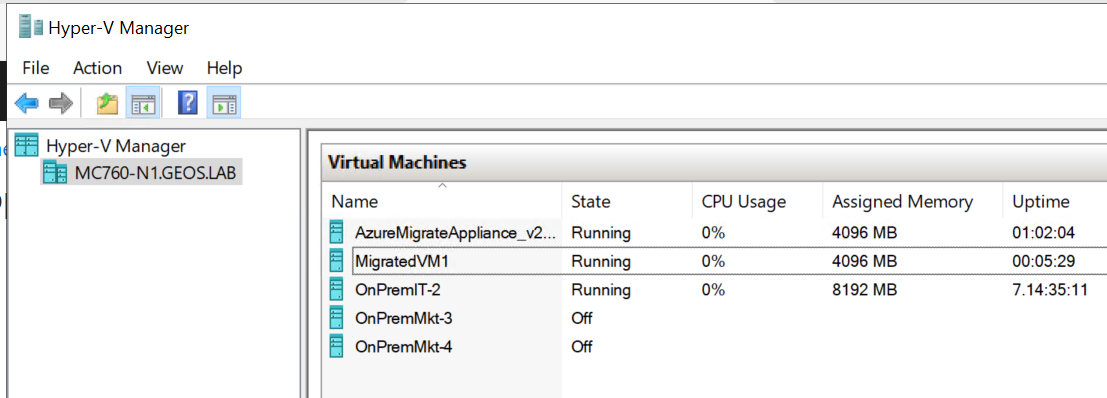

We’ll start then from the assumption that you have already migrated a VM from (let’s say) Nutanix into your Azure Stack HCI cluster. This VM, imaginatively named ‘MigratedVM1’, will then be visible in Hyper-V Manager, Failover Cluster Manager, or Windows Admin Center as a standard virtual machine.

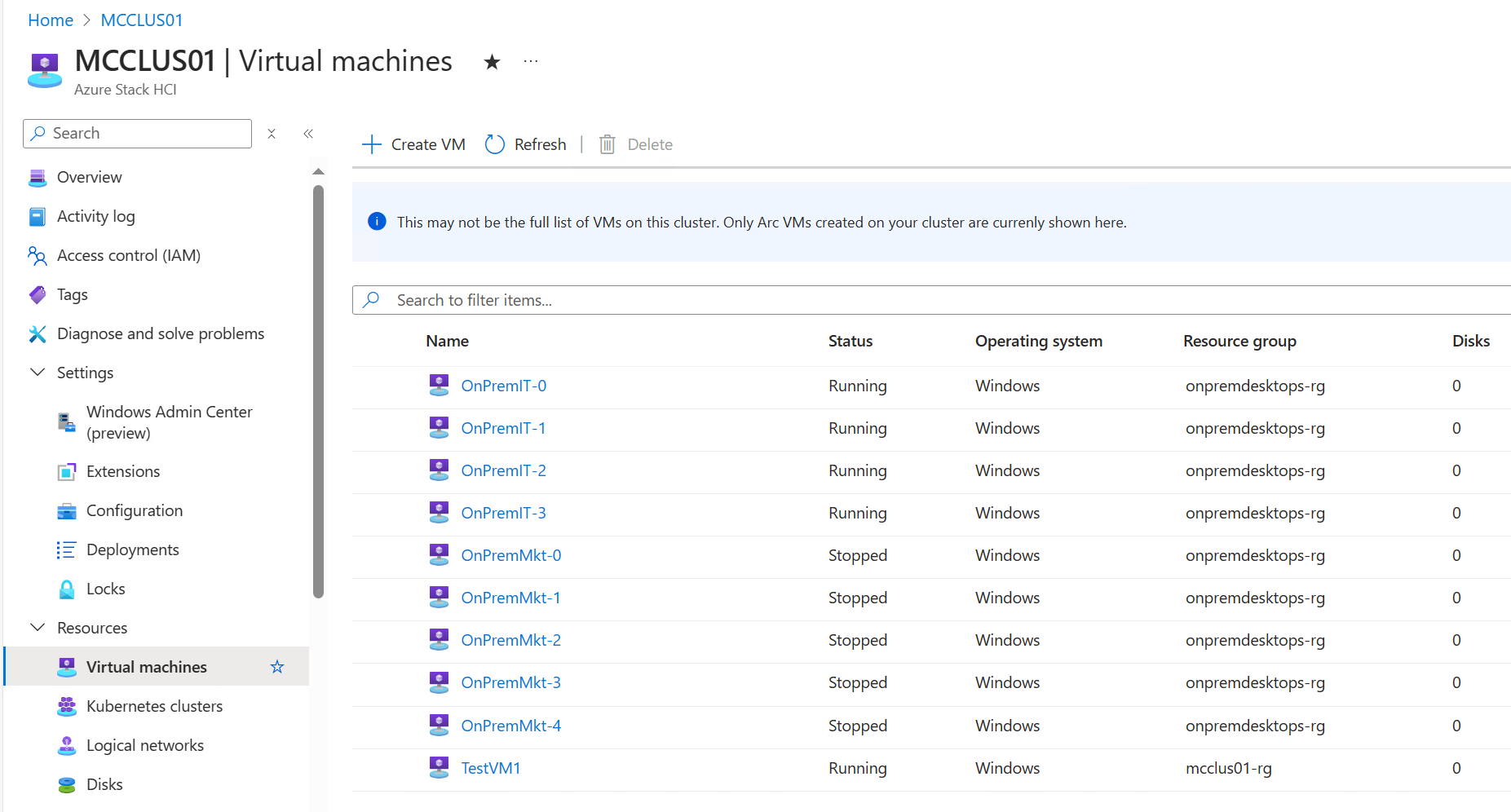

It will not, however, be visible from the Azure Stack HCI VM management blade in the Azure portal, as the screenshot of that blade shows.

This is where our friend Azure Migrate for Hyper-V to Azure Stack HCI comes into play. Because Azure Stack HCI is built on Hyper-V, we can use Azure Migrate with the same Azure Stack HCI cluster set as both the source and the target, and by passing the migrated VM through the Azure Migrate service we can convert it into a true Azure Stack HCI VM, all within the bounds of the same cluster.

This isn't going to be a step-by-step, blow-by-blow account of how to set up and use Azure Migrate; that's a well documented path. The intent here is to go through the points of interest when performing a migration within the same cluster.

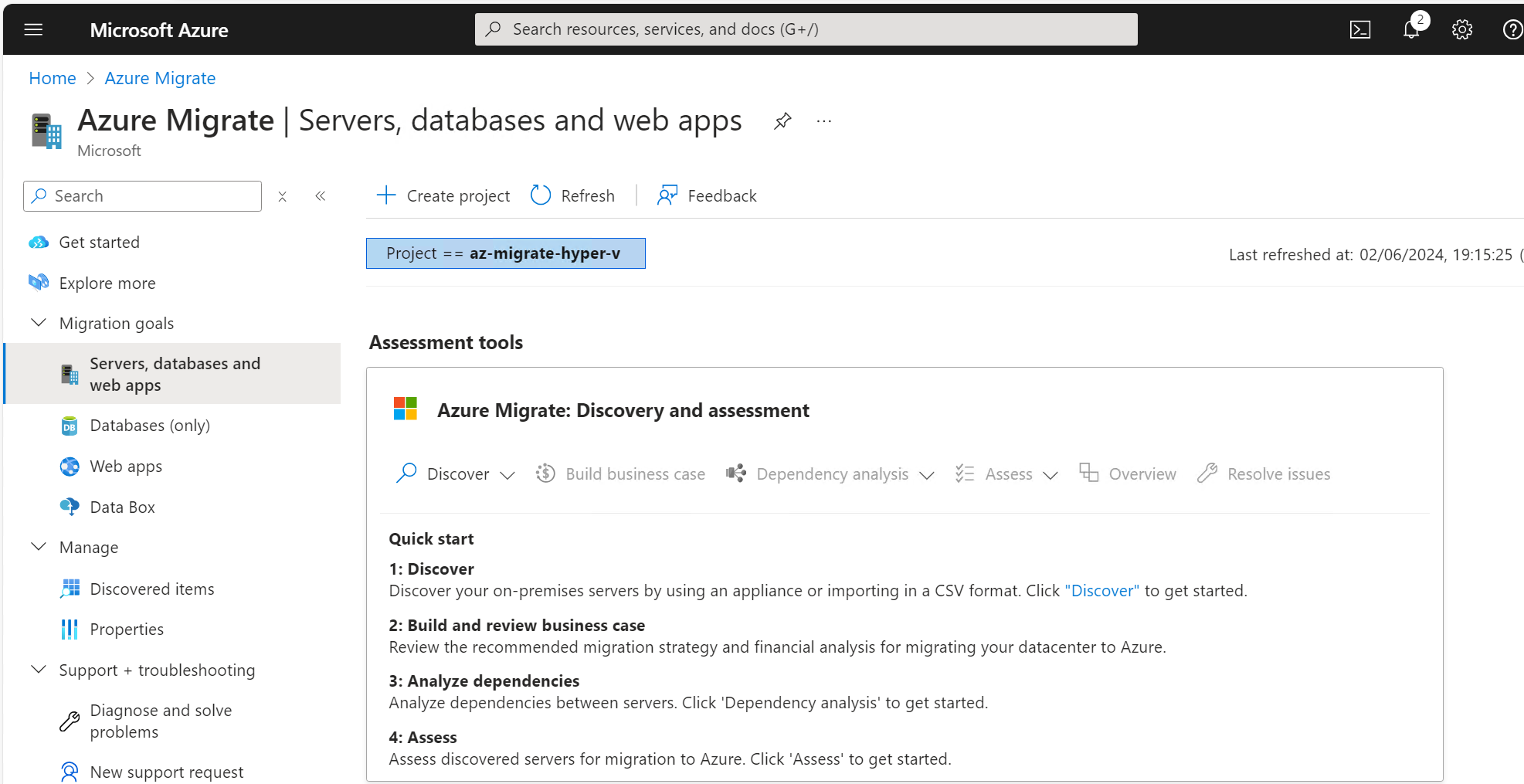

We start off as usual by creating an Azure Migrate project - in this case az-migrate-hyper-v.

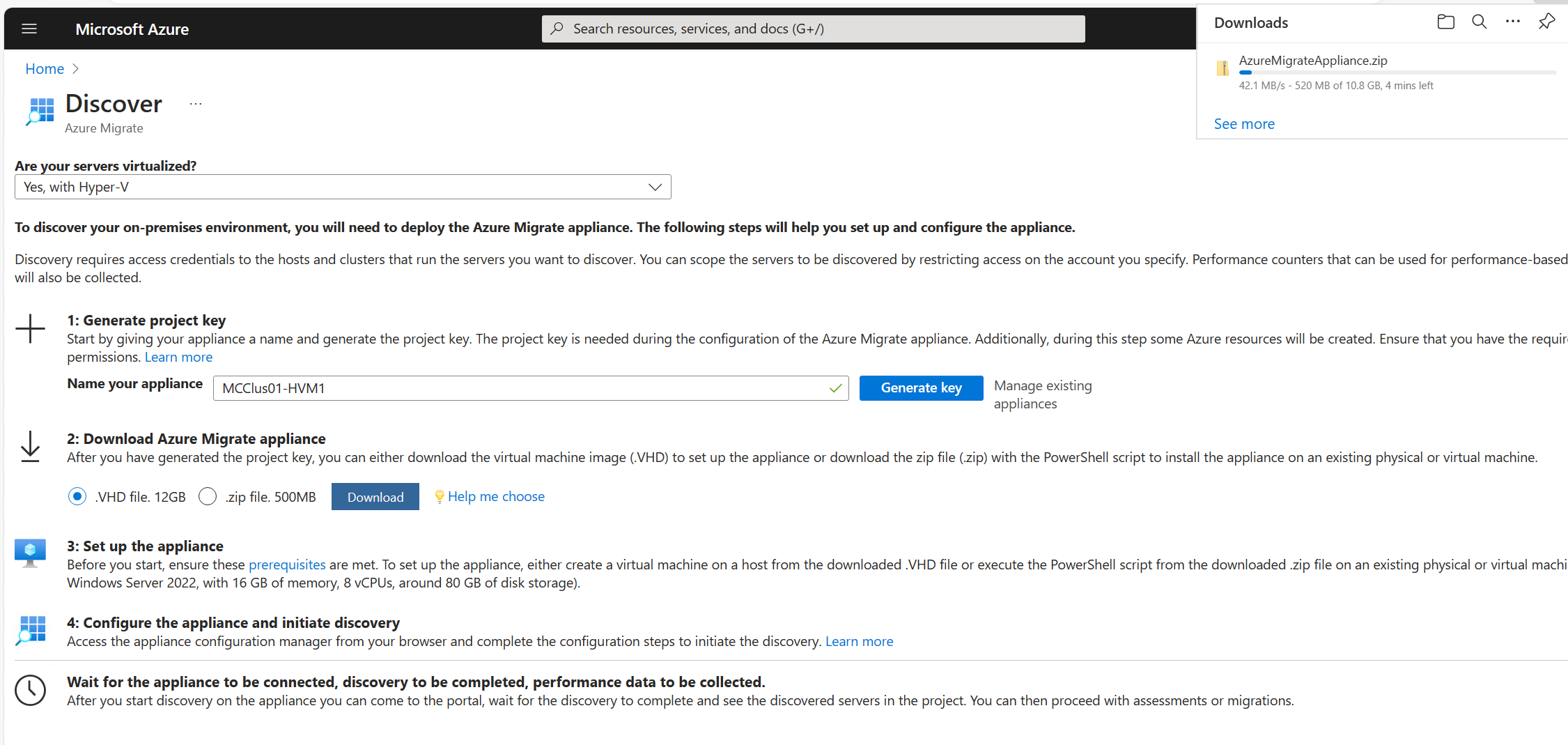

There are two appliances that need to be deployed in order to perform an Azure Migrate operation to Azure Stack HCI. The first will run on the source cluster, the second on the destination. In our case both clusters are the same cluster. In order to deploy the first appliance in our Azure Stack HCI system, download the source appliance VHD.

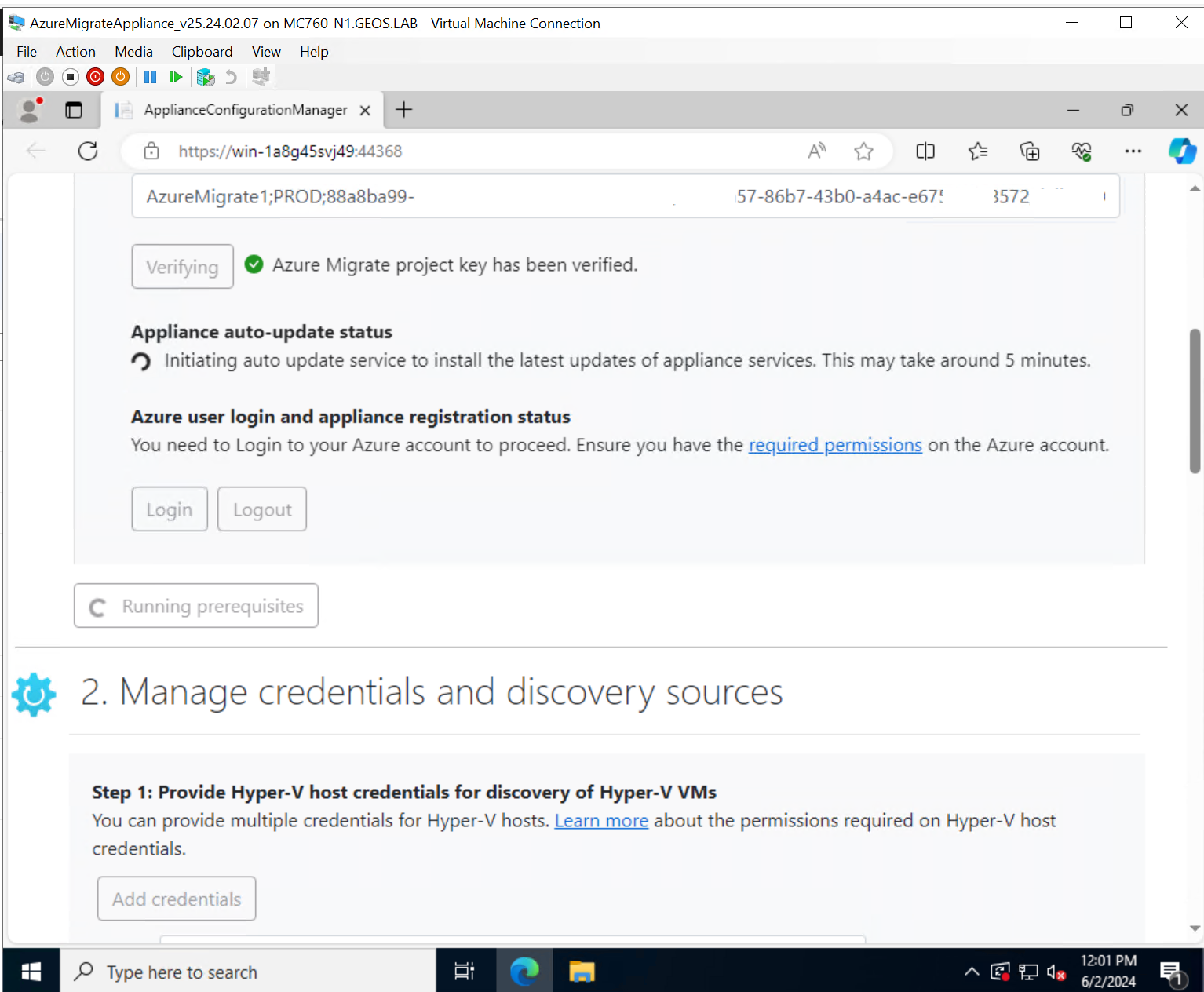

The appliance is deployed by importing it into Hyper-V Manager. Once it's deployed and you log in, the Azure Migrate service will start and open a configuration wizard in a web browser. Walk through this as normal, adding the key generated in the Azure Migrate project and applying any updates.

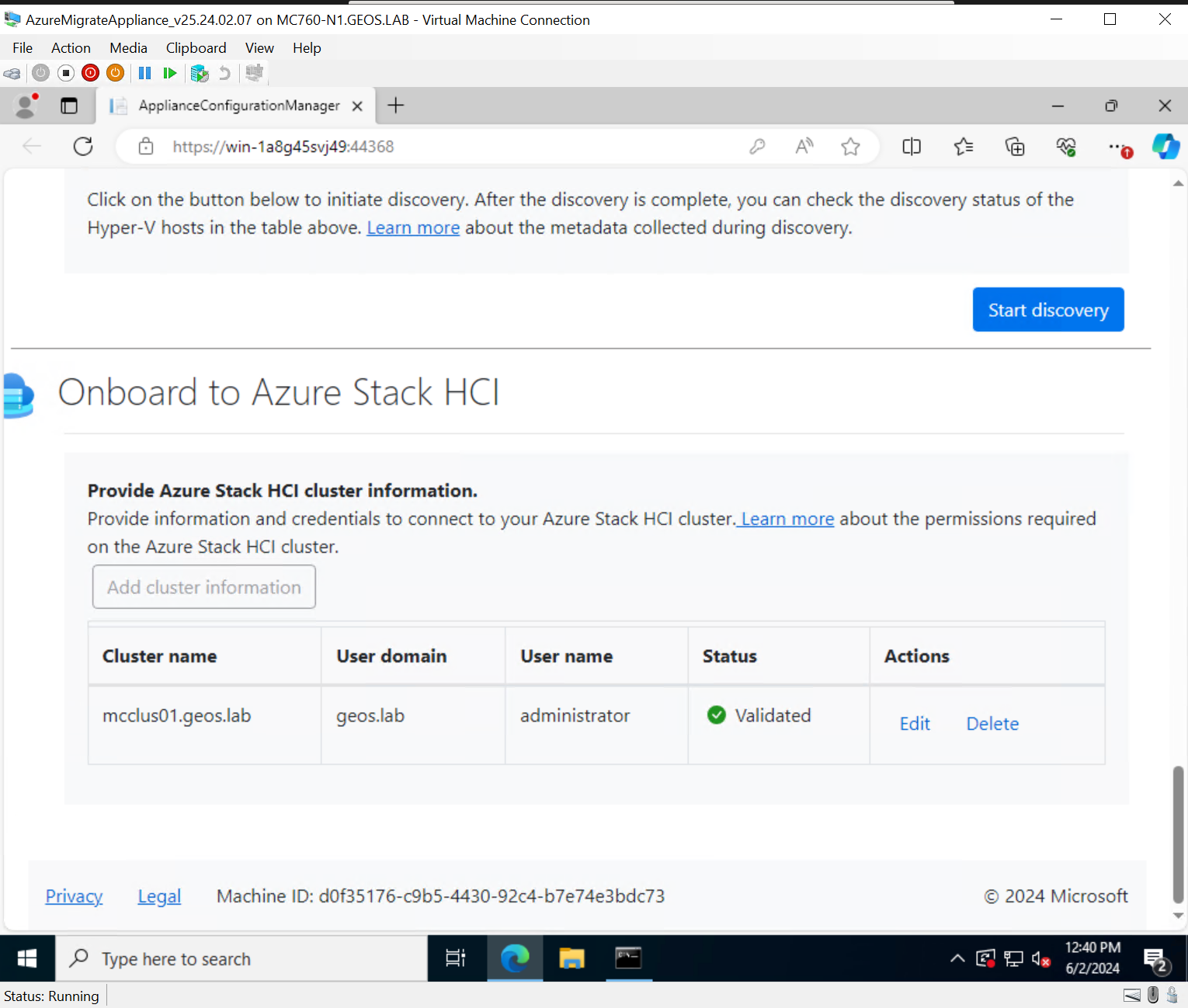

The first point of difference from a normal Azure Migrate project comes at the bottom of the source appliance configuration wizard. Now we find an option to Onboard to Azure Stack HCI. By inputting Azure Stack HCI cluster information here, the appliance knows that you want to migrate workloads to an Azure Stack HCI environment, rather than the default Azure environment.

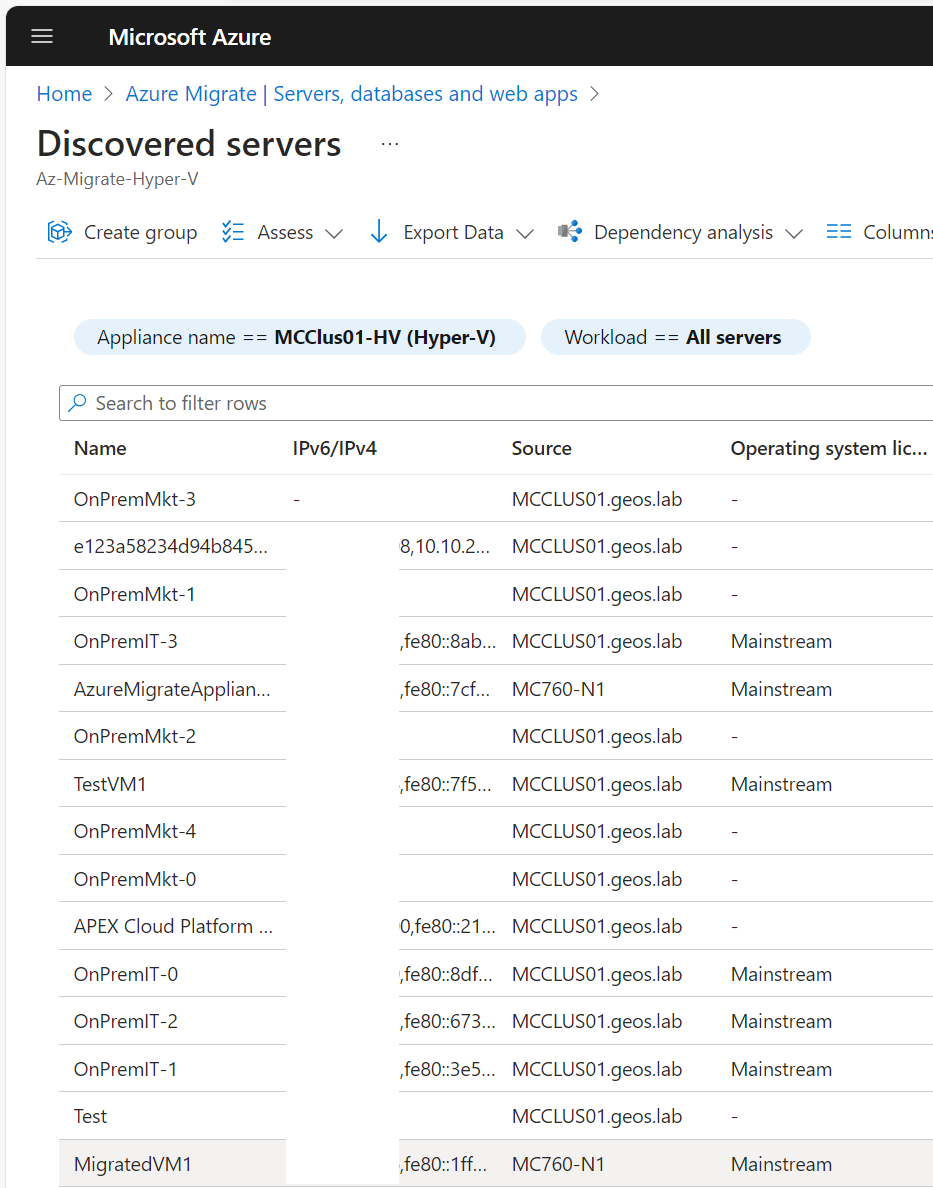

The appliance will initiate discovery, and in short order find all of the VMs running on your Azure Stack HCI cluster. Note that there are a number here that weren't showing up in the Azure portal in a previous screenshot - the Arc Resource Bridge, the APEX Cloud Platform Manager, and MigratedVM1 - none of these are Azure Stack HCI VMs. That said, the first two are intended to be black-boxed appliances running inside the cluster, providing up-stack integration to Azure and down-stack integration to the physical infrastructure and wider Dell ecosystem respectively, so they don't need to be. Note also that MigratedVM1's source is showing as MC760-N1 rather than MCCLUS01.geos.lab. This is because I hadn't created the appliance as a clustered role; I later rectified this, but didn't grab a new screenshot. Oh well.
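On a cluster with more than a handful of VMs, it can help to work out which discovered VMs actually need converting: everything the appliance finds, minus what the portal already lists as Azure Stack HCI VMs, minus the infrastructure appliances that are meant to stay as they are. The Python sketch below assumes you've copied those lists by hand; `vms_to_convert`, `ArcVM01` and `ArcVM02` are hypothetical names, not part of Azure Migrate, and nothing here queries Azure or Hyper-V.

```python
# Sketch: decide which VMs on the cluster still need converting.
# Every VM name here is illustrative input typed by hand; nothing is
# read from Azure, Hyper-V or the Azure Migrate appliance.

def vms_to_convert(discovered, hci_vms, infrastructure):
    """Return discovered VMs that are neither Azure Stack HCI VMs already
    nor infrastructure appliances meant to stay plain Hyper-V VMs."""
    return sorted(set(discovered) - set(hci_vms) - set(infrastructure))


# What the appliance discovered on the cluster (ArcVM01/ArcVM02 are hypothetical).
discovered = ["ArcVM01", "ArcVM02", "Arc Resource Bridge",
              "APEX Cloud Platform Manager", "MigratedVM1"]
# What the Azure Stack HCI VM blade in the portal already lists.
hci_vms = ["ArcVM01", "ArcVM02"]
# Black-boxed appliances that don't need to be Azure Stack HCI VMs.
infrastructure = ["Arc Resource Bridge", "APEX Cloud Platform Manager"]

print(vms_to_convert(discovered, hci_vms, infrastructure))  # ['MigratedVM1']
```

Using plain set differences keeps the logic obvious; the real work is keeping the infrastructure list accurate so appliances like the Arc Resource Bridge are never queued for conversion.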

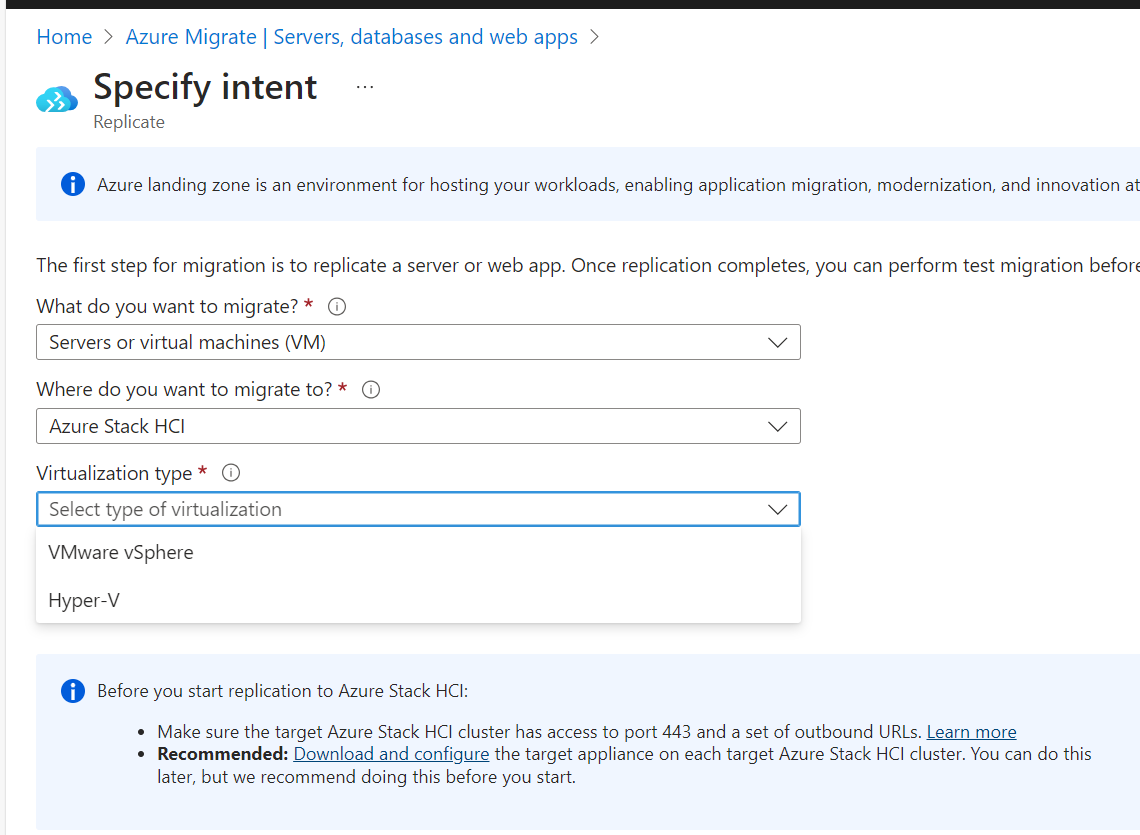

Now, as we progress through the standard Azure Migrate process of setting up VM replication from the Azure portal, we're proffered a new migration option: as well as migrating as an Azure VM, we can now migrate to Azure Stack HCI. Note that while both VMware vSphere and Hyper-V are presented as options, only Hyper-V is usable today, as VMware migration is still in private preview.

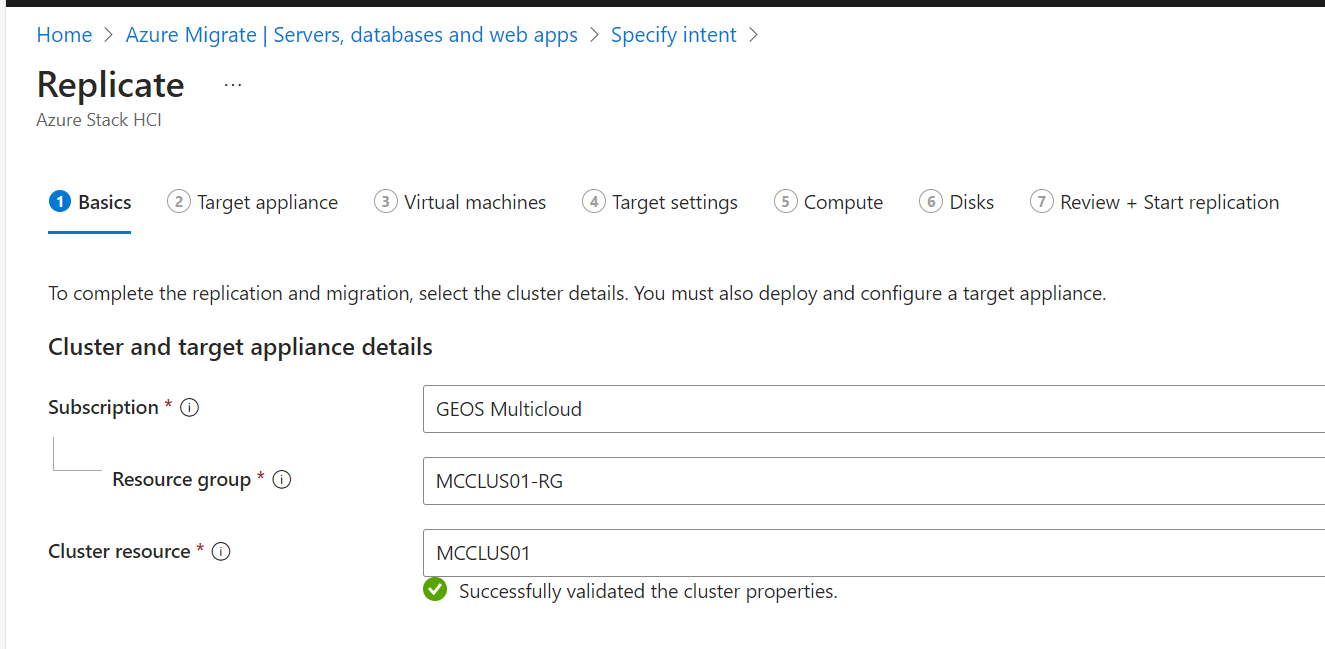

In the next step, we find that the typical Azure Migrate experience has changed to accommodate the requirements for Azure Stack HCI. Step one is to choose the cluster resource to migrate workloads to - this is the ARM representation of your Azure Stack HCI cluster, in this case MCCLUS01.

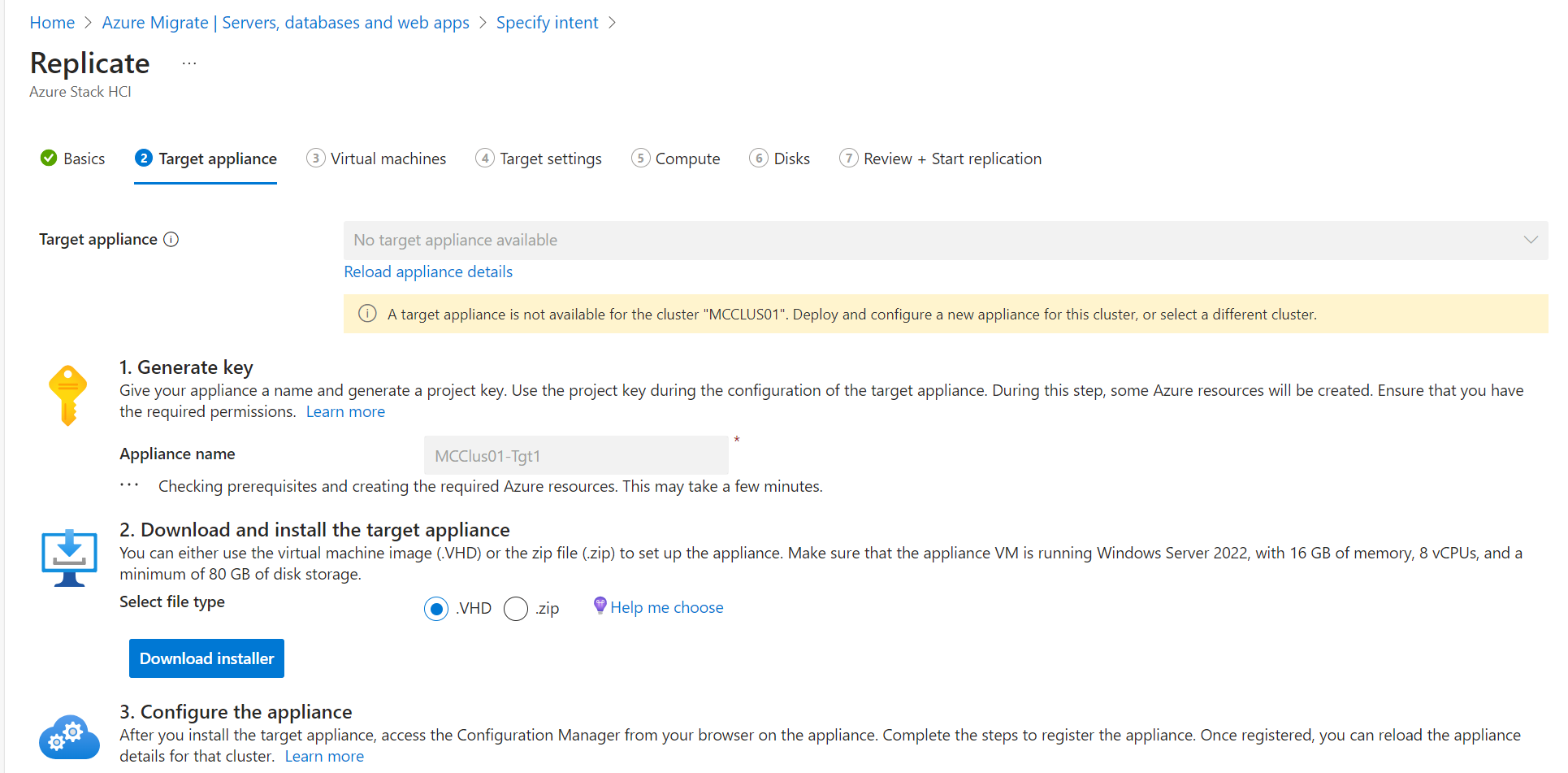

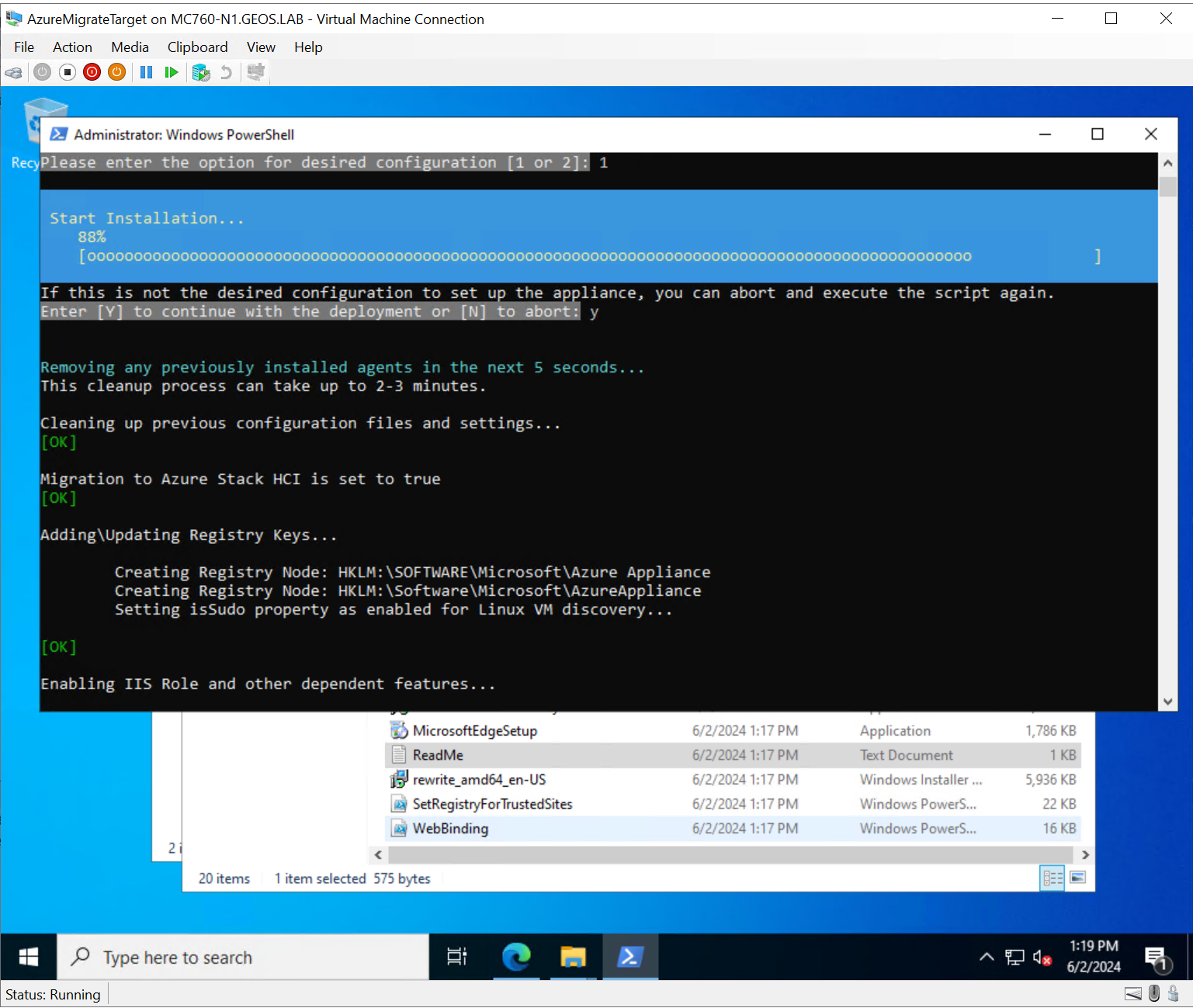

The next step is very similar to the one where we downloaded the source appliance, except here we need to download the target appliance bits. We're offered the option to download either a VHD or a .zip; in my experience both contain the same thing - a number of scripts that you need to run on a VM that you create manually - so just download the zip. After downloading it, create a VM to act as the target appliance. This just needs to be a Windows Server 2022 VM with 16 GB RAM, 8 vCPUs, and at least 80 GB of storage.
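Because the target appliance VM is built by hand, it's easy to under-size it. Here's a minimal Python sketch that checks a planned VM against the minimums quoted above (Windows Server 2022, 16 GB RAM, 8 vCPUs, 80 GB storage). `VmSpec` and `check_target_appliance` are hypothetical helpers, and the planned values are typed in rather than read from Hyper-V.

```python
from dataclasses import dataclass
from typing import List


@dataclass
class VmSpec:
    os: str
    memory_gb: int
    vcpus: int
    disk_gb: int


# Minimums for the target appliance VM as described in this walkthrough.
TARGET_APPLIANCE_MIN = VmSpec(os="Windows Server 2022", memory_gb=16, vcpus=8, disk_gb=80)


def check_target_appliance(spec: VmSpec, minimum: VmSpec = TARGET_APPLIANCE_MIN) -> List[str]:
    """Return a list of problems; an empty list means the spec meets the minimums."""
    problems = []
    if spec.os != minimum.os:
        problems.append(f"OS is {spec.os!r}, expected {minimum.os!r}")
    if spec.memory_gb < minimum.memory_gb:
        problems.append(f"RAM is {spec.memory_gb} GB, need at least {minimum.memory_gb} GB")
    if spec.vcpus < minimum.vcpus:
        problems.append(f"vCPU count is {spec.vcpus}, need at least {minimum.vcpus}")
    if spec.disk_gb < minimum.disk_gb:
        problems.append(f"storage is {spec.disk_gb} GB, need at least {minimum.disk_gb} GB")
    return problems


planned = VmSpec(os="Windows Server 2022", memory_gb=16, vcpus=4, disk_gb=127)
print(check_target_appliance(planned))  # ['vCPU count is 4, need at least 8']
```

Returning every problem at once, rather than stopping at the first, means one pass tells you everything to fix before building the VM.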

After creating your target appliance VM, copy the contents of the zip file into it and, from an elevated PowerShell prompt, run the AzureMigrateInstaller.ps1 script. It uses the other included scripts and installers to set up the target appliance automatically, and in short order it's done.

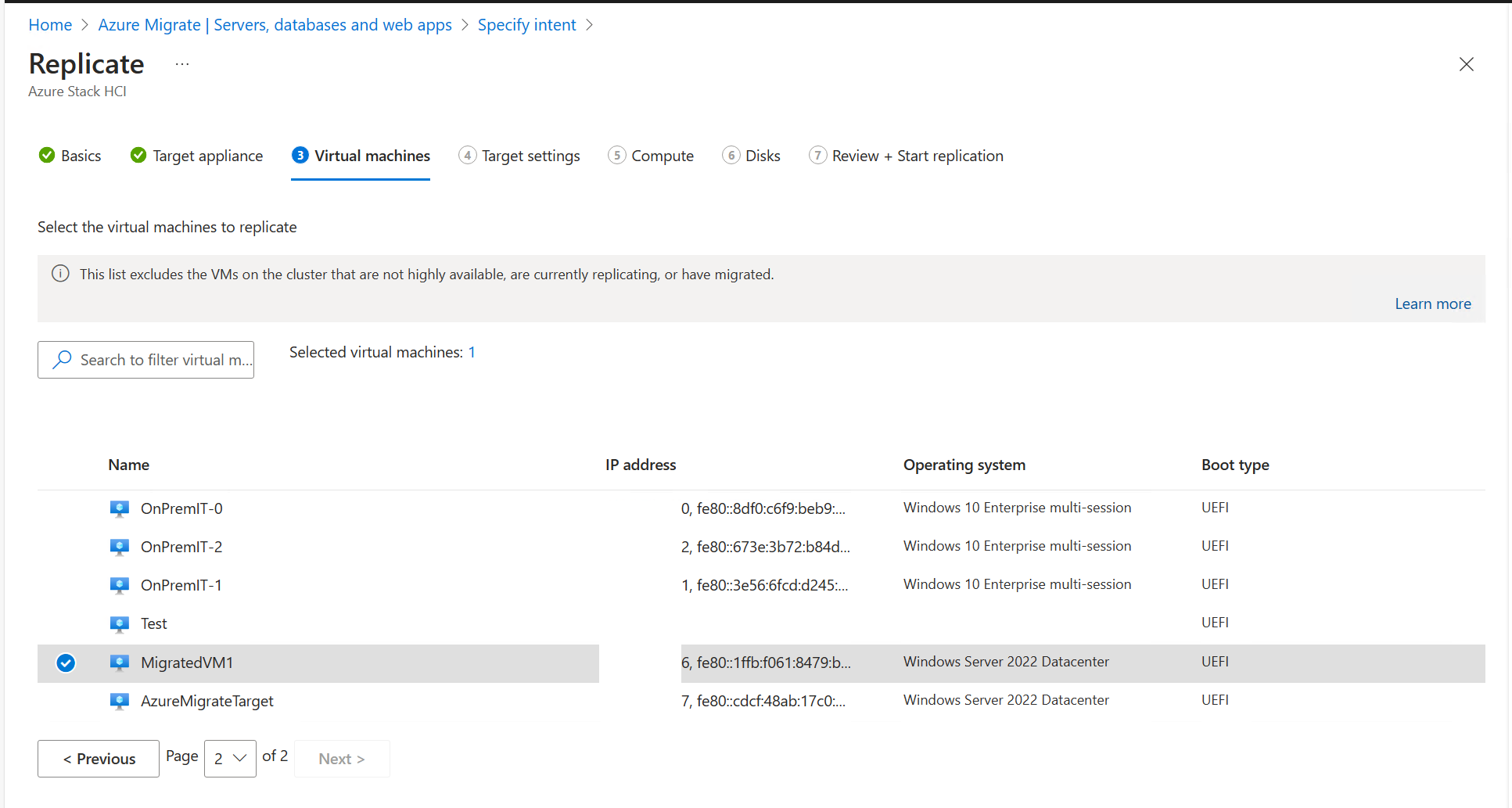

Once your target appliance is deployed and all updates are applied, you can return to the Azure Migrate project in Azure and progress to the next step in the process: selecting the virtual machines that you want to migrate (or, in our case, more accurately, convert). I will, of course, select MigratedVM1 as the sole VM to replicate.

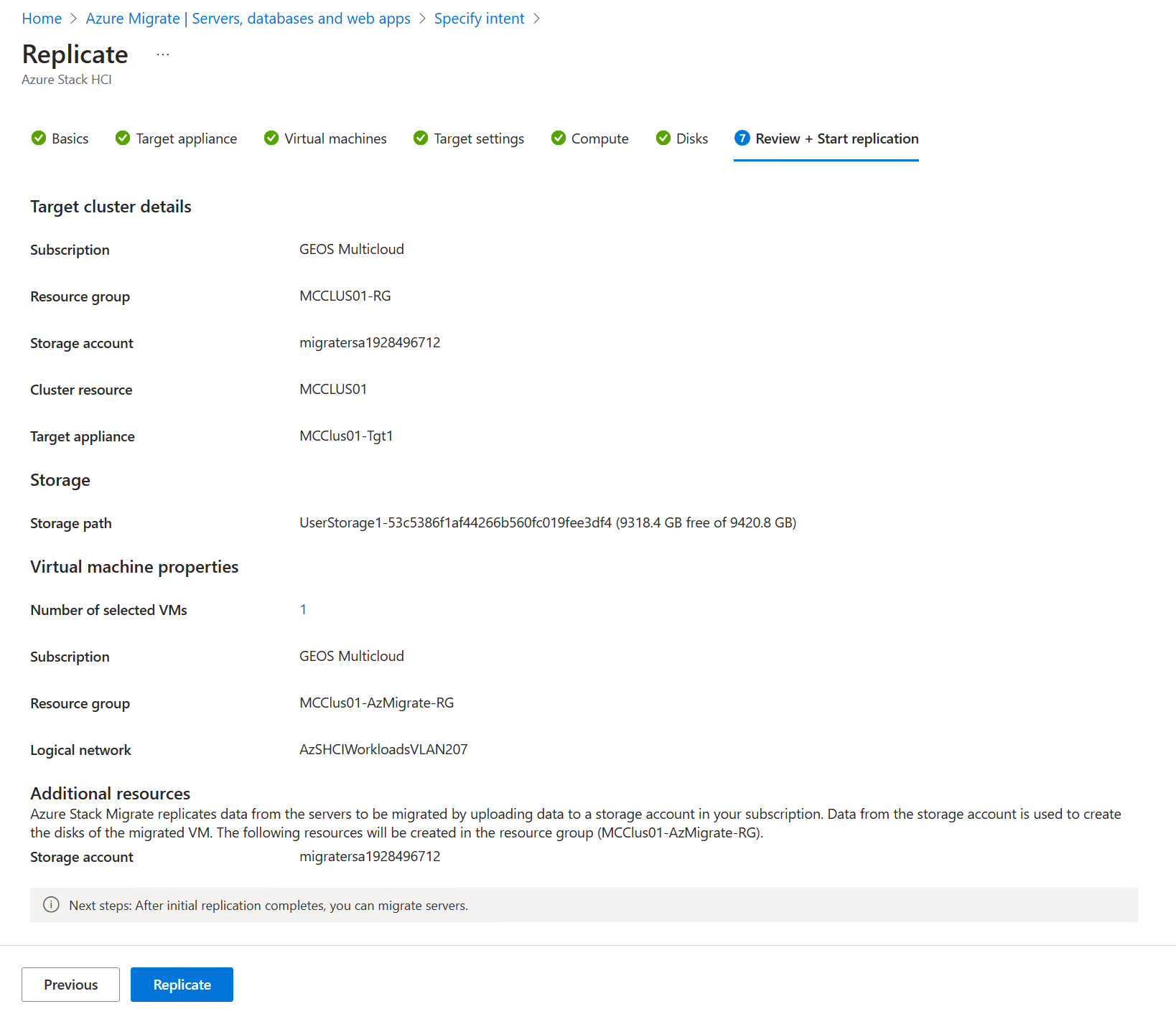

The following few pages of settings are straightforward enough that we don’t need to belabour them - choose a resource group for the target VM ARM resource to be created in, choose compute settings (CPU, RAM), and ensure disk settings are correct. With that, we can initiate the initial replication.

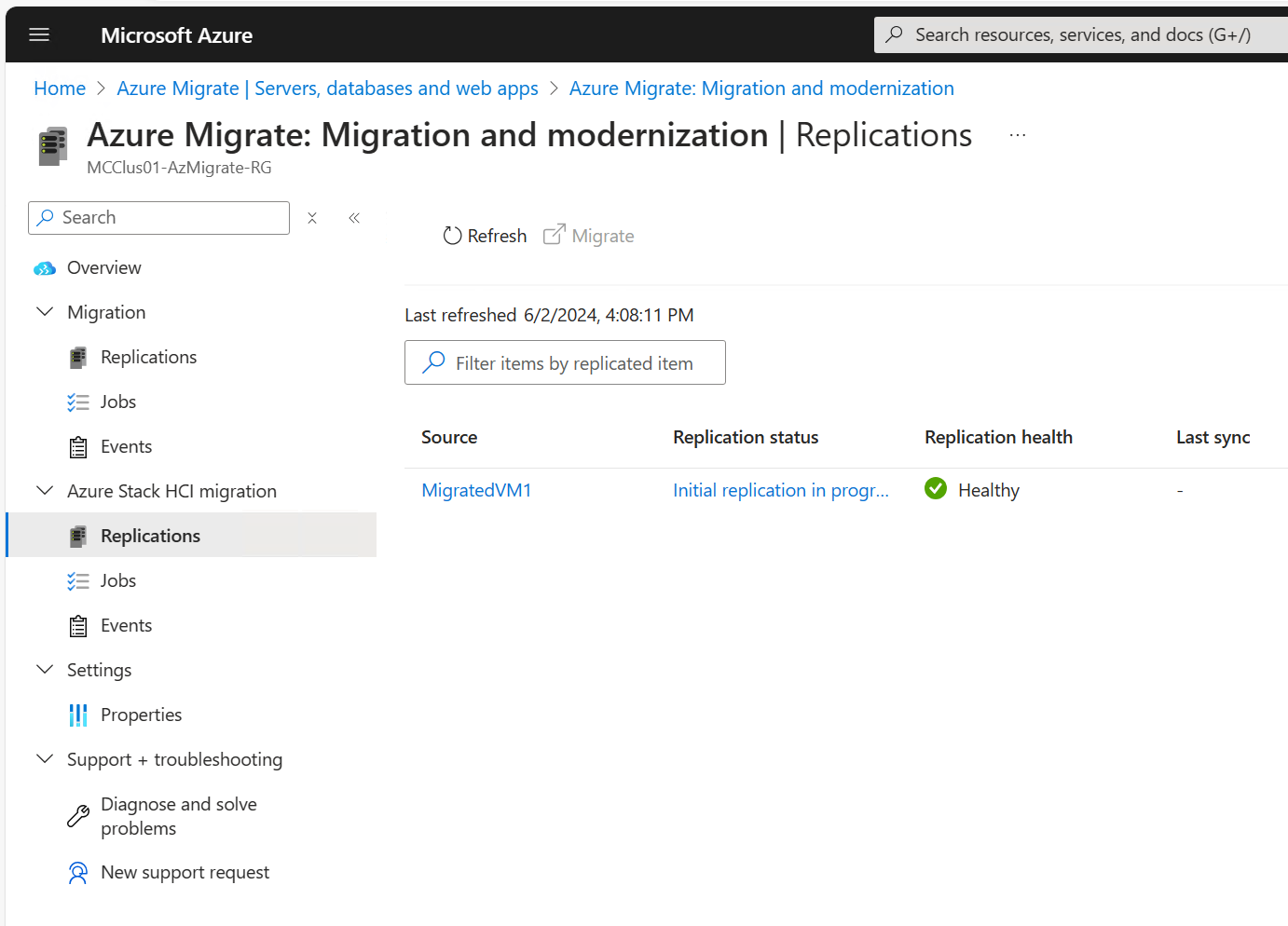

After starting initial replication, we can navigate to the usual Azure Migrate overview page which will show us ongoing Replications, Jobs, and Events, except now you’ll see down the left hand side that there’s a whole separate section for monitoring Azure Stack HCI migration events. In the Replications pane, we can see that the initial replication has started for MigratedVM1.

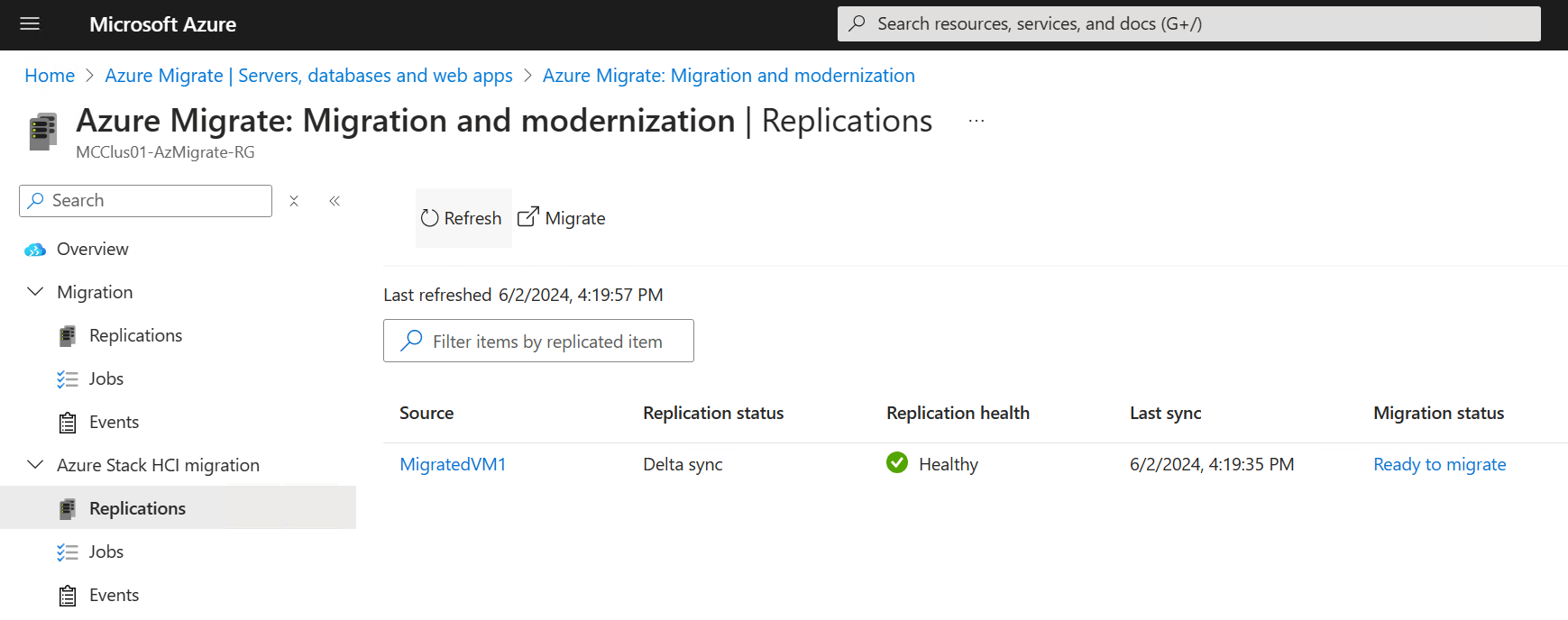

Once the initial replication has completed, the Azure Migrate source and target appliances will keep the (powered off) VM at the destination and the (powered on) source VM in sync through a delta sync.
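Conceptually, a delta sync transfers only what has changed since the previous sync. The toy Python sketch below illustrates that idea by comparing per-block hashes of two byte strings. It's a simplified illustration under stated assumptions, not how Azure Migrate or Hyper-V actually track changed blocks; the 4-byte block size and the helper names are made up.

```python
import hashlib

BLOCK_SIZE = 4  # bytes per block; deliberately tiny so the example is easy to follow


def split_blocks(data: bytes, size: int = BLOCK_SIZE):
    """Cut a disk image into fixed-size blocks."""
    return [data[i:i + size] for i in range(0, len(data), size)]


def delta_sync(source: bytes, target: bytes, size: int = BLOCK_SIZE):
    """Bring target in line with source, sending only blocks whose hash differs.

    Returns (new_target, indexes_of_blocks_sent).
    """
    src_blocks = split_blocks(source, size)
    tgt_blocks = split_blocks(target, size)
    sent, result = [], []
    for i, block in enumerate(src_blocks):
        old = tgt_blocks[i] if i < len(tgt_blocks) else b""
        if hashlib.sha256(block).digest() != hashlib.sha256(old).digest():
            sent.append(i)        # changed since the last sync: transfer it
            result.append(block)
        else:
            result.append(old)    # unchanged: nothing needs to be sent
    return b"".join(result), sent


target = b"AAAABBBBCCCC"          # state after initial replication
source = b"AAAAXXXXCCCC"          # the running source VM has rewritten block 1
target, sent = delta_sync(source, target)
print(sent, target == source)     # [1] True
```

In a real replication system the two sides sit on different machines, which is why comparing compact hashes or change-tracking data beats shipping whole blocks to compare them.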

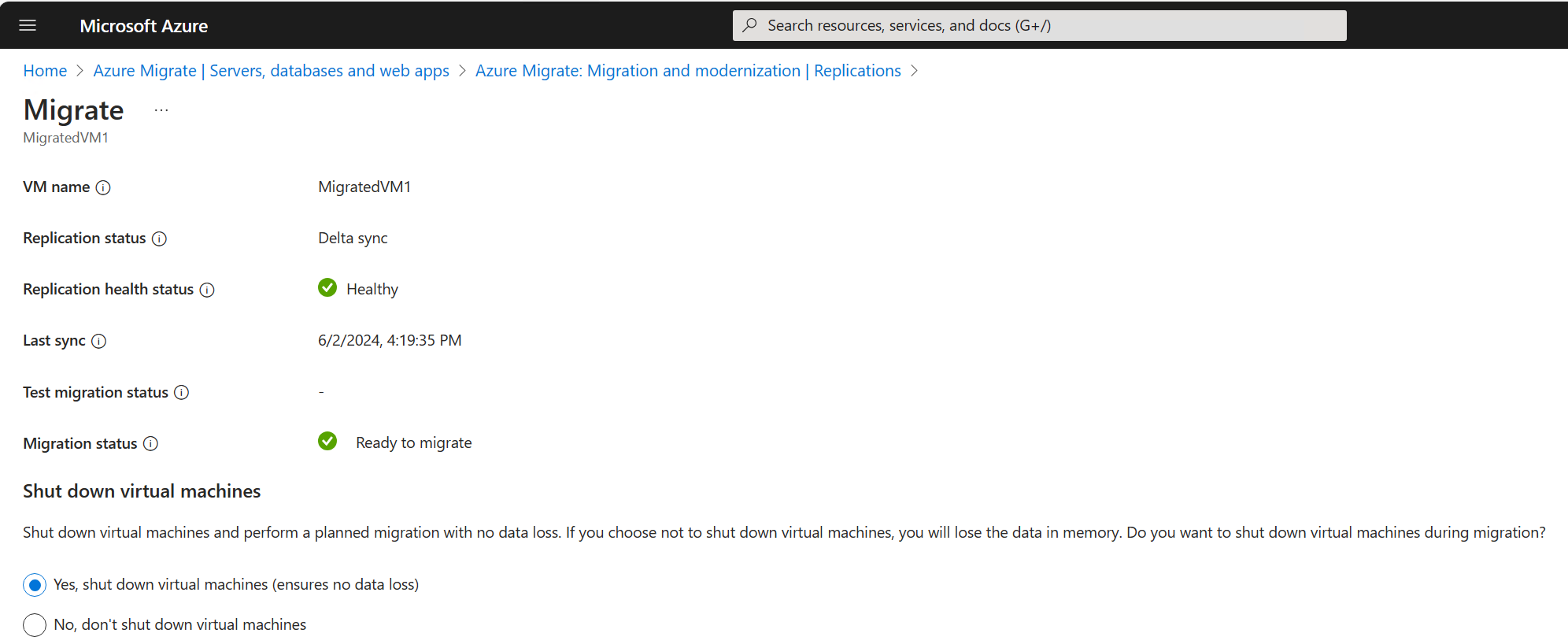

With replication complete and the source and target remaining in sync, we can initiate the migration itself, which asks whether we want to shut down the source VM or leave it running. In this case we definitely want to shut down the source, as we're migrating within the same cluster. Shutting it down also ensures that no changes are missed between the final delta sync and the cutover, so it's best practice from a data loss prevention perspective as well.
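Why shutting down the source matters can be shown with a toy timeline. In the Python sketch below, writes are modelled as (time, label) pairs, and any write a still-running source accepts after the final delta sync never reaches the target. This is a simplified model, not how Azure Migrate orchestrates the cutover; `cutover` and the order labels are hypothetical.

```python
from typing import List, Tuple


def cutover(writes: List[Tuple[int, str]], final_sync_at: int,
            shut_down_source: bool) -> Tuple[List[str], List[str]]:
    """Toy cutover model.

    writes: (time, write) pairs the source VM's workload attempts.
    final_sync_at: time of the last delta sync before the target VM starts.
    Returns (writes that reach the target, writes stranded on the source).
    """
    on_target = [w for t, w in writes if t <= final_sync_at]
    after_sync = [w for t, w in writes if t > final_sync_at]
    if shut_down_source:
        # A powered-off source cannot accept later writes, so none are stranded;
        # clients simply wait for the target VM to come up.
        return on_target, []
    # A running source keeps accepting writes that the target never receives.
    return on_target, after_sync


writes = [(1, "order-1001"), (2, "order-1002"), (5, "order-1003")]
print(cutover(writes, final_sync_at=3, shut_down_source=False))
# (['order-1001', 'order-1002'], ['order-1003'])
print(cutover(writes, final_sync_at=3, shut_down_source=True))
# (['order-1001', 'order-1002'], [])
```

The trade-off is a short outage instead of silently diverging data, which is almost always the right call.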

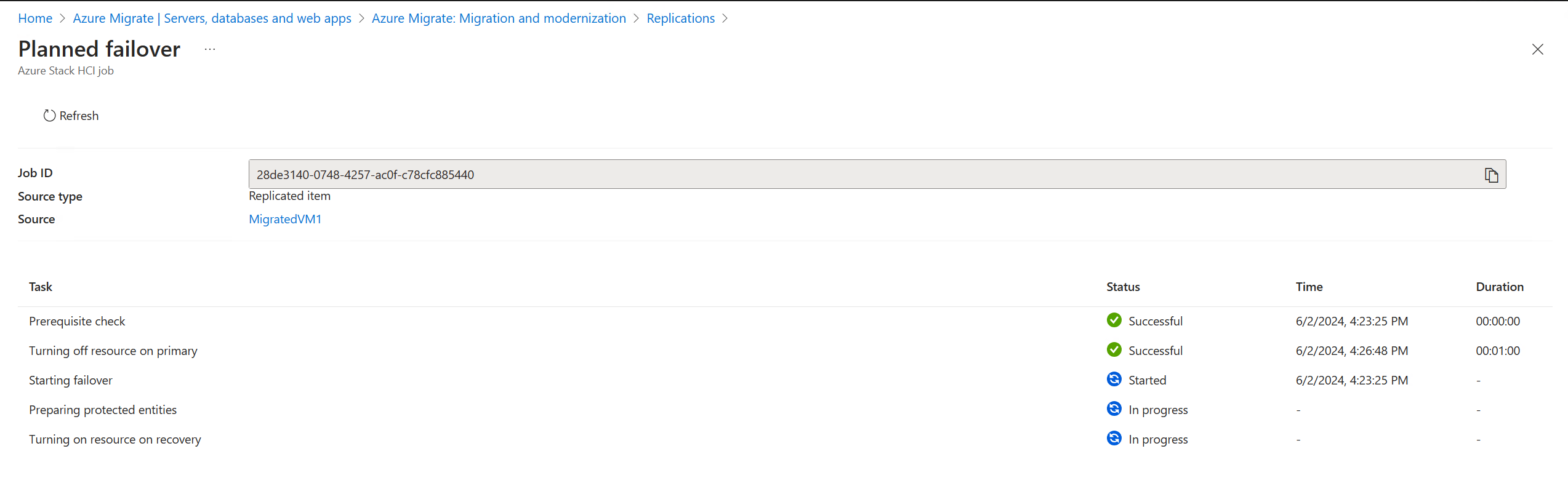

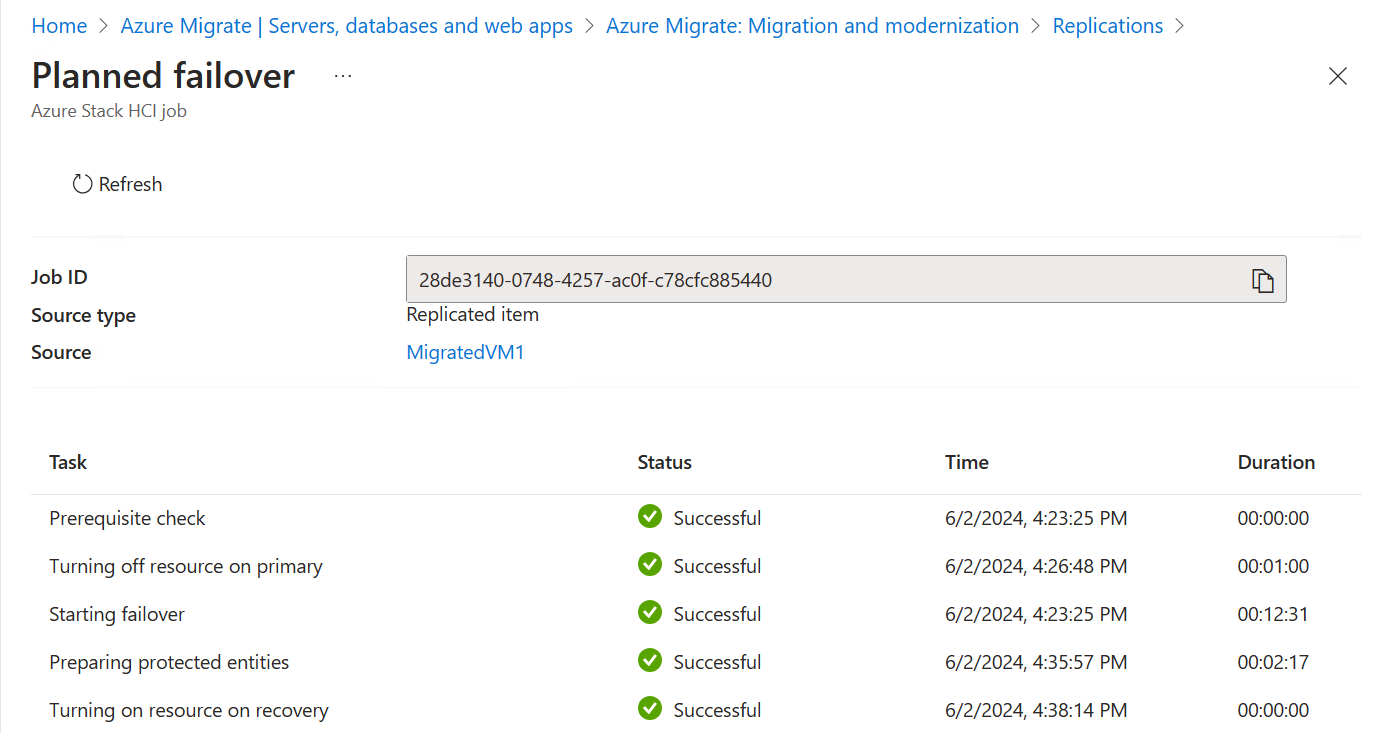

The failover activity will start, and you can monitor as it progresses.

In my case, the entire process took around 15 minutes to complete.

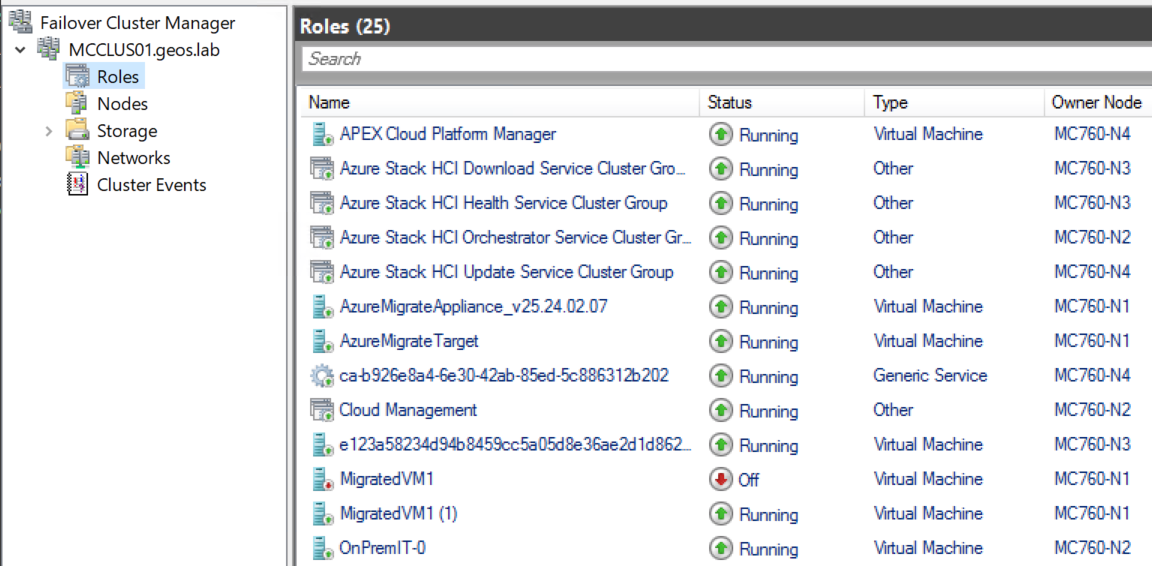

If we now look in Failover Cluster Manager, we can see two VMs - MigratedVM1 which is powered off, and was our original Hyper-V VM, and MigratedVM1 (1) which is powered on, and is our new Azure Stack HCI VM.

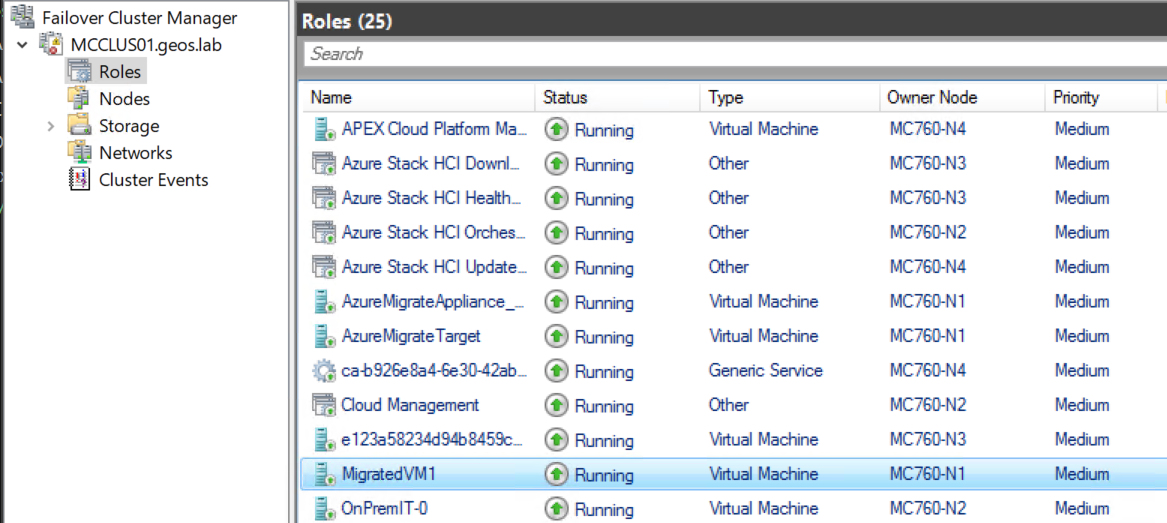

I cleaned this up by removing the old VM from Failover Cluster Manager and Hyper-V Manager, deleting its files from disk, and renaming the cluster resource MigratedVM1 (1) to MigratedVM1.
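If you have several converted VMs to tidy up, you can plan the renaming before touching Failover Cluster Manager. Here's a small Python sketch, assuming the duplicate follows the " (n)" naming pattern seen here. `original_name` and `rename_plan` are hypothetical helpers that only compute names and do not rename anything on the cluster.

```python
import re

# Matches names such as "MigratedVM1 (1)": a base name plus a " (n)" duplicate marker.
_DUPLICATE_SUFFIX = re.compile(r"^(?P<base>.+?) \((?P<n>\d+)\)$")


def original_name(name: str) -> str:
    """Strip a trailing ' (n)' marker, e.g. 'MigratedVM1 (1)' -> 'MigratedVM1'."""
    match = _DUPLICATE_SUFFIX.match(name)
    return match.group("base") if match else name


def rename_plan(cluster_vms, removed):
    """Map each remaining ' (n)' VM to its original name, refusing to rename
    onto a name still used by a VM that hasn't been removed."""
    remaining = [vm for vm in cluster_vms if vm not in removed]
    plan = {}
    for vm in remaining:
        new_name = original_name(vm)
        if new_name != vm:
            if new_name in remaining:
                raise ValueError(f"{new_name!r} still exists; remove the original VM first")
            plan[vm] = new_name
    return plan


print(rename_plan(["MigratedVM1", "MigratedVM1 (1)"], removed={"MigratedVM1"}))
# {'MigratedVM1 (1)': 'MigratedVM1'}
```

The guard mirrors the order used above: remove the old VM first, then rename, so two resources never share a name.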

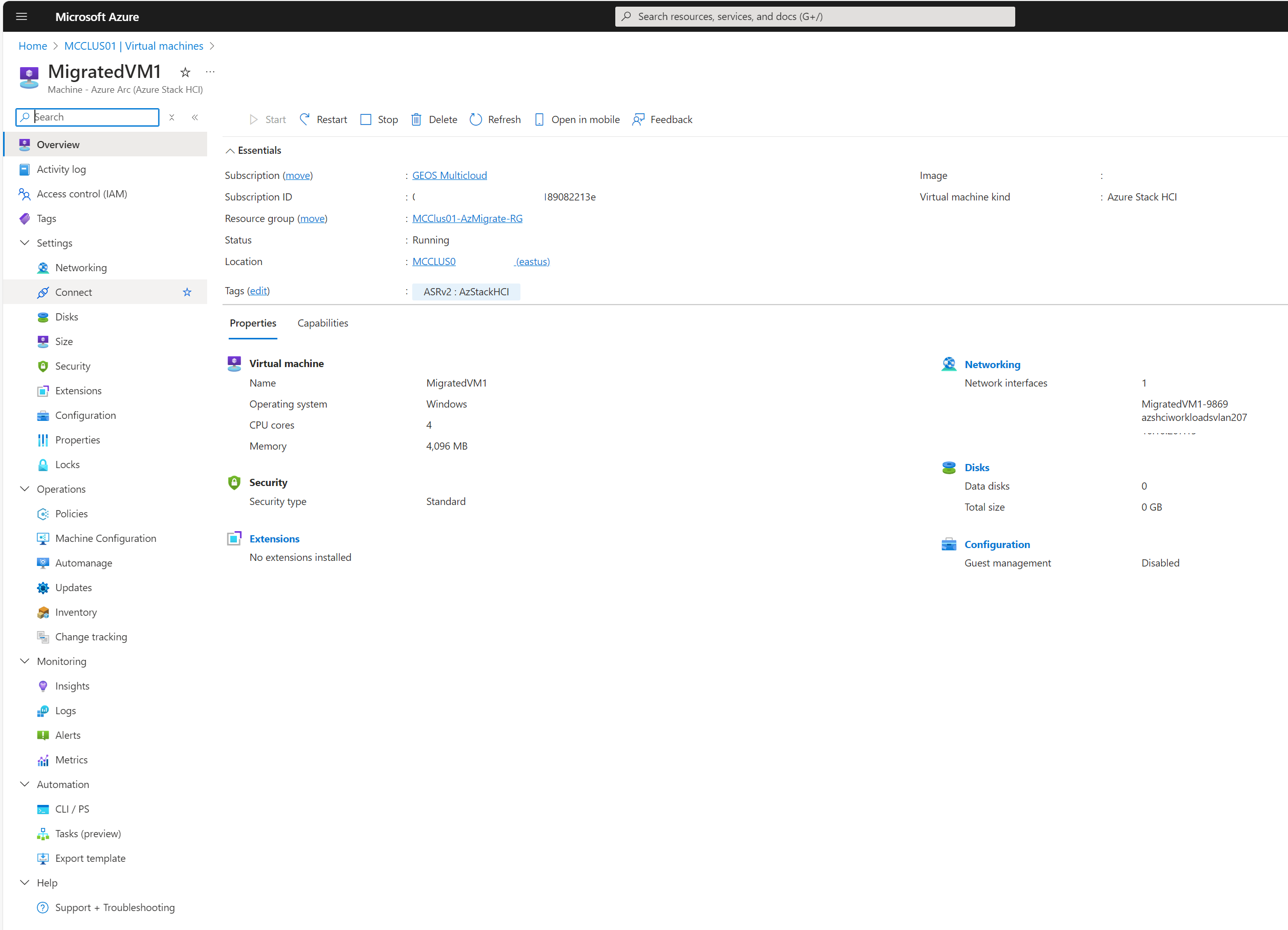

Success! Now when we look at our list of Azure Stack HCI VMs in the Azure Portal, MigratedVM1 is visible.

If we click into the VM, we can see that the Virtual machine kind is Azure Stack HCI, and down the left-hand side all of the management features and functionality we’d associate with an Azure Stack HCI VM are now available for us to use. Migration is successful, and we’ve converted a standard Hyper-V VM into an Azure Stack HCI VM.

Setting up the source and target appliances is the most time-consuming part of this exercise, but luckily it's a one-time activity. Once that's done, any newly migrated Hyper-V VM can be converted to an Azure Stack HCI VM with just a few clicks and a short wait.

Hopefully it’s clear why this is such a powerful capability - any migration from a source outside of Hyper-V or VMware will require a mechanism for hydrating Hyper-V VMs into full Azure Stack HCI VMs, and this is the method available for us to do this today. This also enables everyone running Azure Stack HCI 23H2 in production today to start to plan migration of workloads into their cluster, without having to sacrifice features or functionality. I have no doubt that Microsoft will enable this hydration ability with both first and third party software in the future, but in the meantime, this process lets us use familiar migration tools with a small extra conversion step at the end of the process. Excellent.